A/B testing and multivariate testing (MVT) are two different ways to optimize your website. Both let you test changes, measure the results, and make improvements based on what you learn.

Beyond that, these test types have almost nothing else in common.

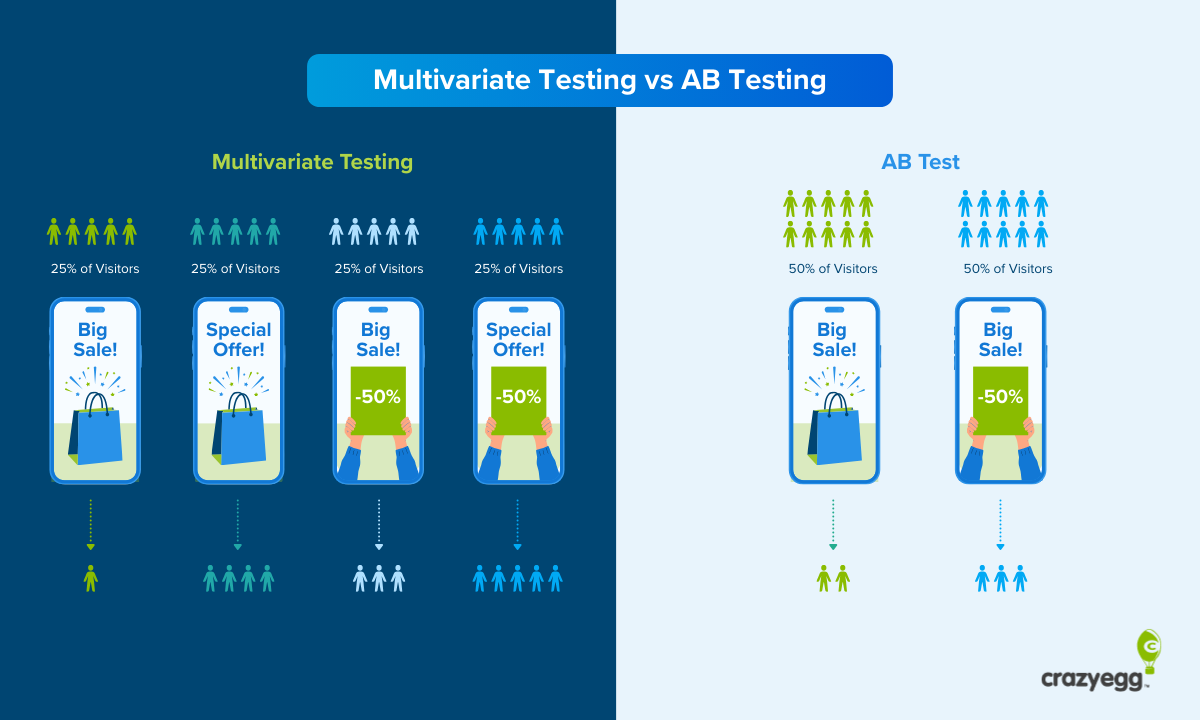

This guide starts with a cheat sheet that provides a quick, visual breakdown of multivariate testing versus A/B testing. The rest of the post walks through all the cheat sheet concepts in much greater detail, with plenty of examples.

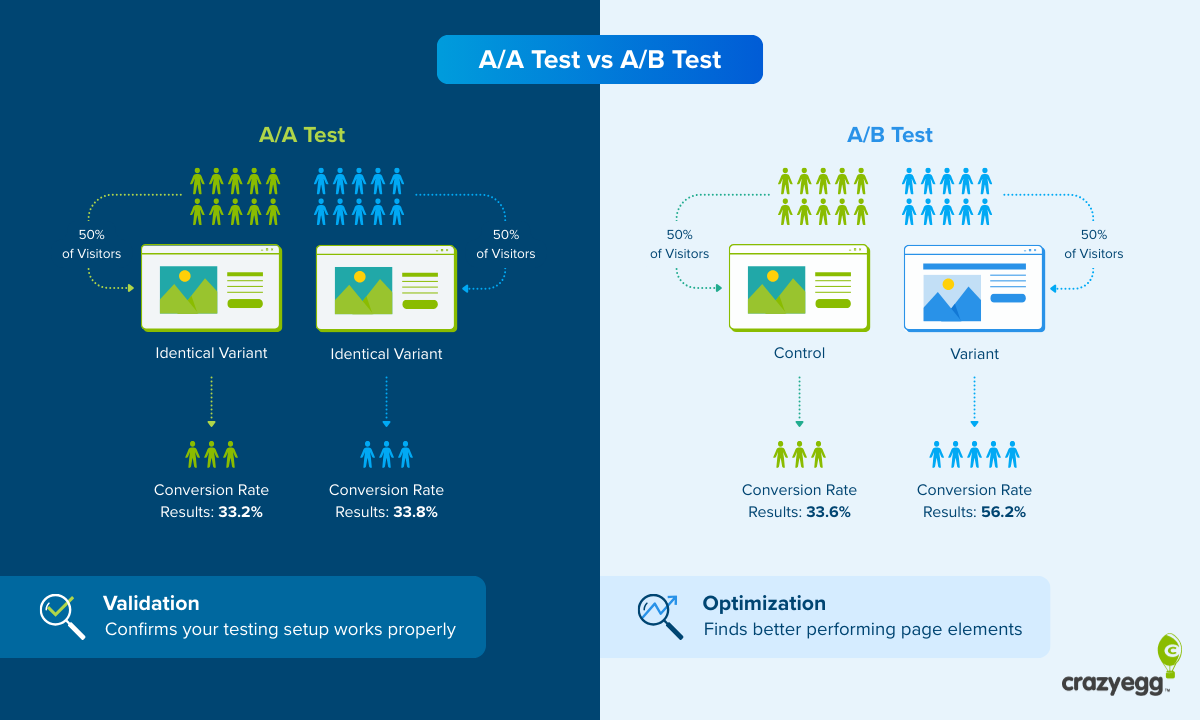

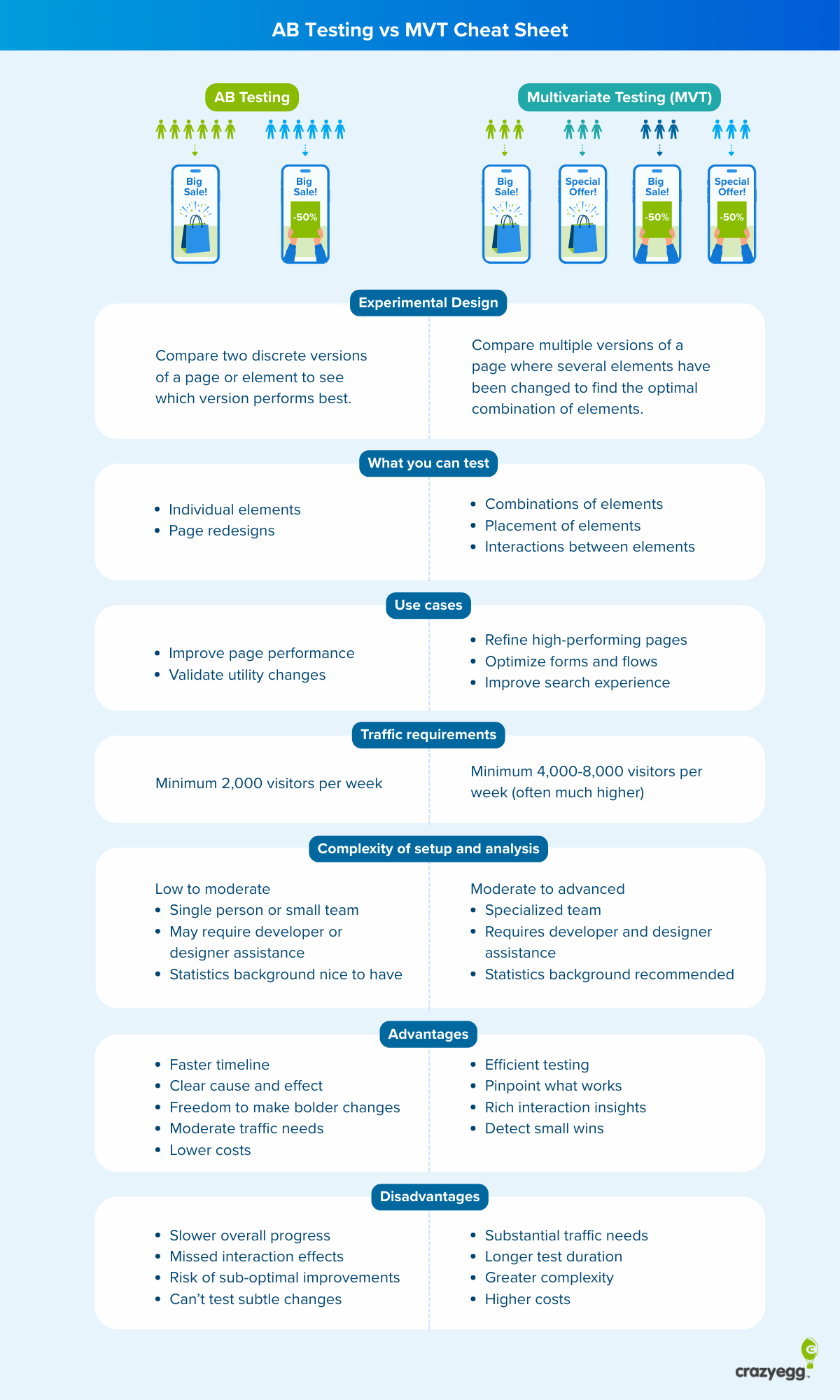

A/B Testing vs MVT Cheat Sheet

This cheat sheet provides a concise summary of the important differences between A/B testing and multivariate testing.

In-Depth Breakdown: Multivariate Testing vs A/B Testing

Let’s walk through each of the sections from the cheat sheet in more detail. I’ll offer more comprehensive definitions, examples, and links to helpful resources.

Experimental design

A/B testing and multivariate testing are both controlled experiments that randomly split traffic between different versions of a web page. During the test, each user interacts with one of the versions while important website metrics are tracked.

Once enough users have been through the test, teams can compare the performance of different versions and decide which one is the best based on the metrics they care about.

Where A/B tests and multivariate tests differ is in terms of setup, complexity, and the kinds of decisions that they can help with.

At a high level, here’s how to think of the difference:

- A/B testing compares the performance of an individual element in isolation

- Multivariate testing compares the performance of multiple elements in combination

Let’s take a closer look at how each type of test is run, which will help you better understand how they are different.

During an A/B test, traffic to a web page is split evenly between the control (A) and the variation (B). The control is the existing web page, and the variation is a modified version of the control.
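Most testing tools handle the random split for you. As a rough illustration of one common approach (not the method of any particular tool), the Python sketch below hashes a visitor ID together with a hypothetical experiment name, so visitors are assigned to versions at random but each visitor keeps seeing the same version on return visits. The function name `assign_variant` and the ID format are assumptions for illustration.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, variants: list[str]) -> str:
    """Assign a visitor to one of `variants`, with equal probability for each.

    Hashing the experiment name together with the visitor ID makes the choice
    look random across visitors but keeps it stable for any single visitor.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest, 16) % len(variants)  # 0 .. len(variants) - 1
    return variants[bucket]


# Hypothetical usage: split 10,000 visitor IDs between control (A) and variation (B).
counts = {"A": 0, "B": 0}
for i in range(10_000):
    counts[assign_variant(f"visitor-{i}", "headline-test", ["A", "B"])] += 1
print(counts)  # roughly 5,000 each
```

Because the assignment depends only on the IDs, no lookup table of who saw what is needed to keep a returning visitor's experience consistent.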

After a sufficient number of users have seen either the A or B version, the test is stopped and results are analyzed.

The core question for A/B testing is: which version performed better?

For example, you could run an A/B test to determine whether a new headline will outperform your existing headline.

If the new headline increases the conversion rate by a statistically significant margin during the test, you can implement the new headline and be reasonably confident that it will increase conversions going forward.
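Testing tools run the significance calculation for you, but it can help to see what “significantly” means in practice. One common choice, though not necessarily the one your tool uses (many tools use Bayesian or sequential methods instead), is a two-sided two-proportion z-test. Below is a minimal sketch using only the Python standard library; the conversion counts are made up for illustration.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for the difference in conversion rate, B minus A."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both versions convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution: erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Made-up results: old headline 200 / 5,000 (4.0%), new headline 260 / 5,000 (5.2%).
z, p = two_proportion_z_test(200, 5_000, 260, 5_000)
print(f"z = {z:.2f}, p = {p:.4f}")  # z is about 2.86 and p about 0.004
```

A p-value below the conventional 0.05 cutoff is what a tool would typically report as a significant win for the new headline.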

During a multivariate test, traffic to a web page is split evenly between a control and several variations in which multiple page elements have been changed.

After each combination has been seen by a sufficient number of users, the test is stopped and the results are analyzed.

The core question for multivariate testing is: which combination of elements performed best?

For example, you could test two headlines, two images, and two CTA buttons during the same test, splitting traffic among all eight possible combinations:

| Element | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 |
|---|---|---|---|---|---|---|---|---|
| Headline | A | A | A | A | B | B | B | B |
| Image | A | B | A | B | A | A | B | B |
| CTA Button | A | A | B | B | A | B | A | B |
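The eight combinations in the table are simply every headline crossed with every image and every CTA button, which is what a full-factorial design means. A short Python sketch that generates them is below; the element labels are placeholders. It numbers the combinations in `itertools.product` order rather than the table’s column order, but the set of combinations is the same.

```python
from itertools import product

headlines = ["Headline A", "Headline B"]
images = ["Image A", "Image B"]
cta_buttons = ["CTA A", "CTA B"]

# Full-factorial design: every headline paired with every image and every CTA.
combinations = list(product(headlines, images, cta_buttons))

for number, (headline, image, cta) in enumerate(combinations, start=1):
    print(number, headline, image, cta)

print(len(combinations), "combinations")  # 2 x 2 x 2 = 8
```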

If a certain combination of elements has a significantly higher conversion rate than the others, you can confidently implement the winning combination.

If you feel like you need more background, check out this post that covers the basics of A/B testing, or this post that walks through the basics of multivariate testing.

What you can test

A/B testing helps you compare the performance of individual elements or experiences.

Testing individual elements is often referred to as classic A/B testing. Traffic is split 50/50 between the existing page and a variation where a single element has been changed.

Because the pages are identical apart from the one change, you can attribute any statistically significant difference in performance to that change.

These types of tests allow you to experiment with changes to the existing elements on your page, or to introduce new elements and see what happens.

Say you have an opt-in popup to collect email addresses from website visitors. You could use A/B testing to find out:

- If a different incentive increases signups (e.g. discount vs gated content).

- If showing the popup on arrival or after a 20-second delay generates more signups.

- If using different persuasion techniques in your copywriting will increase conversions.

- If eliminating the popup has any impact on signups.

You can also use A/B testing to compare entirely different page designs. This is often referred to as split testing to distinguish it from classic A/B testing.

During a split test, you can change as many elements as you like; however, you are still splitting traffic 50/50 between two distinct experiences, A and B.

Say you have a landing page that sells your product. You could use split testing to determine:

- If long-form content converts better than a short page.

- If a warm and friendly page design performs better than a sleek and corporate one.

- If a product-led page with screenshots and features performs better than a benefits-led page with outcomes and use-cases.

At the end of a split test, you won’t know the effect of any one element; instead, you will know the aggregate impact of the new experience on performance compared with the control.

Multivariate testing helps you compare the effect of multiple changes on a page simultaneously. You can test the performance of multiple combinations of elements and their placement with a multivariate test, as well as understand how the different elements interact.

Because of this setup, running a single multivariate test allows you to evaluate combinations of elements, understanding which headlines, images, and CTAs perform the best together.

MVT can also be used to understand placement effects, like learning where on the page elements have the most impact. For example, you could run a test where you place social proof at the top of the page, near the pricing, and near the CTA.

This allows you to find the combination and placement of elements that perform best for the metrics you care about.

Importantly, MVT enables you to account for interaction effects, such as learning that Headline A outperforms Headline B only when paired with Image B and CTA B. Or you might learn that Headline A performs worse every time it is paired with Image C.

If you were only running an A/B test, you could never discover these types of insights.
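To make “interaction effect” concrete, here is a small Python sketch with invented numbers for a 2 x 2 slice of a multivariate test (two headlines by two images). It computes point estimates only; a real analysis would also check whether the interaction is statistically significant, for example with a regression model that includes an interaction term.

```python
# Hypothetical results from a 2 x 2 test: conversions per 1,000 visitors in each cell.
conversions_per_1000 = {
    ("Headline A", "Image A"): 40,
    ("Headline A", "Image B"): 55,
    ("Headline B", "Image A"): 50,
    ("Headline B", "Image B"): 45,
}


def headline_lift(image: str) -> int:
    """Extra conversions per 1,000 visitors for Headline A over Headline B with `image`."""
    return (conversions_per_1000[("Headline A", image)]
            - conversions_per_1000[("Headline B", image)])


lift_with_image_a = headline_lift("Image A")  # -10: Headline A loses with Image A
lift_with_image_b = headline_lift("Image B")  # +10: Headline A wins with Image B

# Averaged over both images, the headline appears to make no difference...
average_headline_effect = (lift_with_image_a + lift_with_image_b) / 2  # 0.0
# ...yet its effect flips with the image. That dependence is the interaction effect.
interaction = lift_with_image_b - lift_with_image_a  # 20

print(average_headline_effect, interaction)  # 0.0 20
```

In this made-up case, looking at the headline on its own would suggest it does nothing, while the combination view shows Headline A only wins alongside Image B.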

To sum up this section, you can test the performance of discrete elements or experiences with A/B testing, whereas MVT allows you to understand how the elements perform in different combinations.

Use cases

The use cases for A/B testing fall into two general categories: performance and utility.

Performance testing is the familiar use case where teams use A/B testing for conversion rate optimization (CRO) in order to find a version of their web page that delivers better results: more engagement, signups, sales, etc.

For example, teams might use A/B testing for landing pages to find headlines, images, and trust signals that increase the conversion rate. After the test, they implement the “winning” version and get more sales from the same amount of traffic.

While this type of CRO testing is what A/B testing is known for, it is not the only use case.

You can also use A/B testing to determine whether you can make useful changes to a page without hurting performance. I call this utility testing, and it includes changes that:

- Improve page load speeds

- Reduce build or maintenance costs

- Improve accessibility or user experience (UX)

During a utility test, a result showing no change in performance is good enough to count as a winner. As long as the change doesn’t negatively impact conversions, making it will save time or money, or improve UX. That’s great!

For example, you could replace an auto-play video with a static image to speed up page load times. Or you could switch from a custom landing page to a simpler template that’s less expensive to produce or maintain.

So long as the new variation doesn’t negatively impact conversions, you can safely implement the faster, cheaper, or less labor-intensive variation.
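One way to formalize “doesn’t negatively impact conversions” is a non-inferiority check: decide up front how large a drop you would tolerate (the margin), then confirm that the pessimistic end of a confidence interval for the difference stays above it. The Python sketch below is an illustration under stated assumptions: the margins, the one-sided 95% level, and the normal approximation are all choices you would set yourself, and your testing tool may not offer this mode.

```python
import math


def is_non_inferior(conv_control: int, n_control: int,
                    conv_variant: int, n_variant: int,
                    margin: float, z_crit: float = 1.645) -> bool:
    """True if the variant is not worse than the control by more than `margin`.

    `margin` is an absolute drop in conversion rate (0.005 = half a percentage
    point). z_crit = 1.645 gives a one-sided 95% lower confidence bound.
    """
    rate_c = conv_control / n_control
    rate_v = conv_variant / n_variant
    # Unpooled standard error of the difference (normal approximation).
    std_err = math.sqrt(rate_c * (1 - rate_c) / n_control
                        + rate_v * (1 - rate_v) / n_variant)
    lower_bound = (rate_v - rate_c) - z_crit * std_err
    # Pass only if even the pessimistic end of the interval is above -margin.
    return lower_bound > -margin


# Made-up numbers: control 500 / 10,000 (5.0%), static-image variant 495 / 10,000 (4.95%).
print(is_non_inferior(500, 10_000, 495, 10_000, margin=0.01))   # True
print(is_non_inferior(500, 10_000, 495, 10_000, margin=0.002))  # False
```

The second call fails not because the variant is clearly worse, but because 20,000 visitors cannot rule out a drop larger than 0.2 percentage points; tighter margins need more traffic.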

Teams almost always use multivariate testing for conversion rate optimization. It’s not a practical tool for utility testing, though it is theoretically capable of being used that way.

One common use is to refine high-performing pages, especially after teams have discovered high-performing individual elements with A/B testing.

For example, when they already have a landing page that is working well, they will use MVT to experiment with different combinations of elements, layouts, and popup timing that might further improve page performance.

MVT is also a useful way to optimize flows and forms. In these situations, the completion rate depends on several elements working together across multiple steps.

Teams can experiment with different form fields, labels, and the field order, as well as show different microcopy and trust badges along the way. Testing all of these elements in different combinations reveals the best possible flow or form design.

Another common use of MVT is to improve search experience and offer the best possible tools and presentation of results for users looking for information on your site. Teams can experiment with different filters, sorting options, and results layouts to see what leads to the highest conversion rate down the line.

Traffic requirements

Both A/B testing and MVT require a sufficient sample size in order to reach statistical significance, which indicates that the observed difference is unlikely to be the product of random chance alone.

In plain terms, this means that you need to get enough traffic to the web page you are testing to ensure that enough users see the control and each of the variations. (For a deeper dive on significance level, statistical power, and sample sizes, check out this guide to hypothesis testing.)
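As a quick sketch of how sample size depends on the lift you want to detect, the standard normal-approximation formula for comparing two conversion rates estimates how many visitors each version needs. The 4% baseline and 5% target below are hypothetical, and alpha = 0.05 with 80% power are common conventions rather than requirements. Because the smallest lift you care about appears squared in the denominator, halving it roughly quadruples the required sample.

```python
import math
from statistics import NormalDist


def sample_size_per_version(baseline_rate: float, target_rate: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate visitors needed in EACH version (two-sided two-proportion test)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 when alpha = 0.05
    z_power = NormalDist().inv_cdf(power)          # about 0.84 for 80% power
    variance = baseline_rate * (1 - baseline_rate) + target_rate * (1 - target_rate)
    effect = target_rate - baseline_rate
    return math.ceil((z_alpha + z_power) ** 2 * variance / effect ** 2)


# Hypothetical goal: detect a lift from a 4% to a 5% conversion rate.
n = sample_size_per_version(0.04, 0.05)
print(n)  # roughly 6,700 visitors per version
```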

In my experience, we have only tested on pages that could send at least 1,000 visitors per week to each variation, including the control. Anything less than that made it difficult for our tests to reach statistical significance in a reasonable timeframe.

By that rule of thumb, standard A/B testing (a control + one variation) needs at least 2,000 visitors per week to detect meaningful differences in performance.

If you test additional variations (A/B/n testing), the traffic requirements increase proportionally with the number of different variations (e.g. 3,000 visitors per week to test a control + two variations).

With multivariate testing, traffic gets split across all of the combinations you are testing, which means the traffic requirements are much greater.

For example, if you test 3 headlines, 2 hero images, and 2 CTA buttons, you would have 12 possible combinations:

| Element | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Headline | A | A | A | A | B | B | B | B | C | C | C | C |
| Image | A | B | A | B | A | B | A | B | A | B | A | B |
| CTA button | A | A | B | B | A | A | B | B | A | A | B | B |

This test would require 12,000 visitors per week minimum in order to test all possible combinations in a reasonable amount of time. If you want to additionally test two different popup timings during the test, you would require 24,000 weekly visitors.
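The arithmetic behind these figures is the number of combinations (the product of the number of versions of each element) multiplied by the per-version rule of thumb. A minimal Python sketch follows; the element names are placeholders, and the 1,000-visitor constant is the rule of thumb from this section rather than a statistical law.

```python
from math import prod

# Rule of thumb from this section: about 1,000 visitors per week for each version.
VISITORS_PER_VERSION_PER_WEEK = 1_000


def weekly_traffic_needed(versions_per_element: dict[str, int]) -> tuple[int, int]:
    """Return (combinations, minimum weekly visitors) for a full-factorial test."""
    combinations = prod(versions_per_element.values())
    return combinations, combinations * VISITORS_PER_VERSION_PER_WEEK


print(weekly_traffic_needed({"headline": 3, "hero_image": 2, "cta_button": 2}))
# (12, 12000)
print(weekly_traffic_needed({"headline": 3, "hero_image": 2, "cta_button": 2,
                             "popup_timing": 2}))
# (24, 24000)
print(weekly_traffic_needed({"page": 3}))  # A/B/n with control + two variations: (3, 3000)
```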

Testing all possible combinations during MVT is known as full-factorial testing. One option that people use to decrease traffic requirements for MVT is to run a fractional-factorial test, which omits some of the possible combinations.

This can be done strategically. For example, if certain headlines and images don’t make sense when shown in combination, then it might make sense to omit those possibilities from the test.

The benefit of fractional-factorial tests is that you won’t have to split traffic among every possible combination. The tradeoff is that you don’t get the full picture. You could fail to discover important interaction effects or even the best possible combination.
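Dropping combinations that don’t make sense together is one approach. Another, common in classic design of experiments, is a half-fraction design that keeps a balanced subset of the combinations. The Python sketch below applies it to three two-version elements; the -1/+1 coding and the defining relation are standard conventions, but this is an illustration, not a recommendation for any specific test. It also makes the tradeoff described above concrete.

```python
from itertools import product

LABEL = {-1: "A", 1: "B"}  # code version A as -1 and version B as +1

# Full factorial for three two-version elements (headline, image, CTA): 8 runs.
full_factorial = list(product([-1, 1], repeat=3))

# Half fraction with defining relation I = ABC: keep only runs whose three codes
# multiply to +1. Traffic is split 4 ways instead of 8.
half_fraction = [run for run in full_factorial if run[0] * run[1] * run[2] == 1]

for headline, image, cta in half_fraction:
    print(f"Headline {LABEL[headline]}, Image {LABEL[image]}, CTA {LABEL[cta]}")

# The tradeoff: in every kept run, the CTA code equals headline x image, so the
# CTA's effect cannot be separated from the headline/image interaction.
print(all(cta == headline * image for headline, image, cta in half_fraction))  # True
```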

Complexity of setup and analysis

A/B testing can usually be handled by one person who knows what they are trying to do. A marketer or CRO specialist without a ton of statistics background is fine.

It’s helpful if they can pull in a designer or developer to help them build variations, but good A/B testing tools make the setup and analysis very straightforward. You won’t have to worry about randomizing traffic, determining sample sizes, or running statistical calculations yourself. Variations are typically created in a drag-and-drop design editor, which does not require any coding.

From a strategy standpoint, A/B tests are relatively easy to design compared to multivariate tests. You just need one variation to test against your control. So long as you focus on high-impact elements (like headlines and CTAs) or major page design changes, your testing tool will report a clear winner if there is one.

Multivariate testing is harder to run without a team. A marketer or CRO specialist can lead, but with more variations to build, design and developer assistance are typically required.

Even a small design mistake could damage the integrity of the test, leading to invalid results. And, if you don’t catch the problem, then you might implement a change that leads to worse outcomes.

Designing tests is also more difficult. Along with more variations to build, each tested element has to make sense in combination with the others.

For example, you are limited in what your headline can reasonably say by the images you select, and vice versa. It takes a bit more thought to design a complete set of logically consistent combinations than it takes to create a single variation for an A/B test.

The analysis for MVT is also much more complex because you need to evaluate interaction effects. Without a background in statistics, it’s easy to miss important patterns, or worse, see patterns where they do not actually exist.

Another thing to consider, especially if you are using MVT to optimize flows and forms, is the need for a UI or UX designer on the team. It helps to have someone who understands interface design and how it impacts user behavior, both for building impactful variations and for drawing accurate insights from test results.

When you are testing headlines and hero images in A/B testing, on the other hand, most marketers are going to be great at designing good tests and making sound interpretations of the results.

Advantages

Here are the upsides of A/B testing and multivariate testing, relative to one another:

A/B testing

- Faster timeline: Running experiments with fewer variations allows you to reach significance faster and implement changes sooner.

- Clear cause and effect: Changing one thing at a time makes it obvious what drove any positive or negative changes you see in the results.

- Freedom to make bolder changes: With only a single variation, you can be more adventurous about what you test in terms of concepts, offers, and designs.

- Moderate traffic needs: More sites can use it on a wider range of pages, without waiting months for data.

- Lower costs: Needs less expensive tools, fewer specialists, and less expertise, with shorter testing periods.

A/B testing is great for speed and clarity. You get answers quickly and the results are easy to explain to people. If there is a winner, it’s clear, and the next steps are obvious.

Multivariate testing

- Efficient testing: Enables you to test multiple elements at once, which lets you evaluate several options with a single experiment.

- Pinpoint what works: Estimates how much each individual change contributed to the overall performance.

- Rich interaction insights: Identifies which elements work best together, which may be different from those which win when tested in isolation.

- Detect small wins: Reveals the impact of multiple minor elements which may not have a large enough effect to be detected with A/B testing.

Multivariate testing is ideal for a high-traffic page that’s already successful. You can test a wide range of small tweaks to quickly find the optimal combination of page elements, learning a lot about what your users prefer most along the way.

Disadvantages

Here are some of the downsides of A/B testing and multivariate testing, relative to one another.

A/B testing

- Slower overall progress: You need to run a series of A/B tests to get data on several elements or variations that MVT could compare in a single test.

- Missed interaction effects: You won’t learn when one element only works well when paired with another.

- Risk of sub-optimal improvements: Optimizing elements individually might not help you find the best possible combination.

- Can’t test subtle changes: Minor changes won’t move the needle enough to reach statistical significance on their own.

As fast as A/B testing is to run, you are limited to a single variable (or page design), which means you have to run sequential tests to get data on more than one idea. And because you are testing individual elements in isolation, you can’t really understand how they interact.

Multivariate testing

- Substantial traffic needs: You may not be able to run MVT on certain pages or test as many combinations as you would like.

- Longer test duration: Even with a lot of traffic, splitting visitors across combinations slows down your timeline.

- Greater complexity: The design, setup, and management of testing is much more difficult, and it creates more room for error.

- Higher costs: Longer tests, more powerful tools, and specialized personnel make MVT significantly more expensive to run.

Multivariate testing is powerful, but it demands more traffic, time, and expertise. Its complexity and resource requirements make it much more expensive to run than A/B testing.

The Best Teams Use Both A/B and Multivariate Testing

The most profitable websites are testing constantly.

They are using A/B testing to discover the most tantalizing offers, the most intuitive page designs, and the highest-converting page elements.

Once they have a page performing at a high baseline, they’ll use MVT to optimize how individual elements work together to support their goals.

And they know their market is constantly evolving. New tastes, new competitors, new products. To keep their sites performing at a high level, they constantly monitor website analytics and conversion funnels for problems and opportunities.

The other thing that they do is segment their results. Yes, the overall conversion rate matters, but sometimes the most important insights come from digging into how different user groups interacted with your test variations.

The best teams are looking at traffic analytics to see how users from different sources interacted. Maybe a test idea strikes a chord with your traffic that’s coming from Facebook. Or maybe mobile users hate the new design but desktop users are converting at a much higher rate.
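A minimal sketch of breaking results down by segment is below, using a handful of invented visitor records in a hypothetical (segment, version, converted) layout. In practice the records would come from your testing or analytics tool. Each segment also needs enough traffic on its own, and slicing results many ways raises the odds of spotting a “winner” by chance.

```python
from collections import defaultdict

# Toy per-visitor records: (segment, version seen, converted?)
visits = [
    ("mobile", "A", True), ("mobile", "A", False),
    ("mobile", "B", False), ("mobile", "B", False),
    ("desktop", "A", False), ("desktop", "A", False),
    ("desktop", "B", True), ("desktop", "B", True),
]


def conversion_by_segment(records):
    """Return {(segment, version): conversion rate} from per-visitor records."""
    totals = defaultdict(lambda: [0, 0])  # (segment, version) -> [conversions, visitors]
    for segment, version, converted in records:
        totals[(segment, version)][0] += int(converted)
        totals[(segment, version)][1] += 1
    return {key: conversions / visitors for key, (conversions, visitors) in totals.items()}


for (segment, version), rate in sorted(conversion_by_segment(visits).items()):
    print(f"{segment:8} {version}: {rate:.0%}")
```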

When you go to the trouble of running A/B testing or MVT, it’s worth going the extra mile to analyze the results at a deeper level. Sales matter. Signups matter. But if you can understand the why behind user actions (or inaction), that’s going to translate into wins far beyond the single page you are testing.