Brands that go to the trouble of conversion rate optimization (CRO) testing usually want to see increases in average order value and revenue per session.

It’s not enough to simply boost conversion rates. They are a key signal, sure, but the ultimate goal of CRO testing has to be improving the metrics that actually drive revenue.

Maintaining that focus is easier said than done during the CRO testing process, which plays out over months and requires resources from a few different teams. Here’s what you need to know to stay on track.

What is CRO Testing?

CRO testing involves running a series of controlled experiments designed to improve the conversion rate of a particular page.

Brands only do this on pages where conversions really matter.

Here are some of the typical pages that undergo CRO testing along with a quick example test to illustrate the types of experiments people are running:

- Landing page: Replace paragraphs with bullet points to improve form submissions.

- Product page: Add a “Related Products” carousel to increase average order value.

- Pricing page: Add a short FAQ section to address objections and boost conversions.

- Cart page: Show bundle recommendations to encourage higher cart totals.

- Checkout page: Remove optional form fields to reduce cart abandonment.

- Homepage/Nav: Add icons to the navigation menu to boost click-through rate to product pages.

Typically companies will focus on one page or page-type at a time, experimenting with a series of different tests on a single research question to find out what really works.

With each new experiment, the small conversion rate wins stack up. You learn which copywriting tactics and design elements work best. You may even find some huge wins by CRO testing different pricing schemes or bundling options.

Over time, you collect rich insights about what resonates most with your target audience and what pushes them over the line to become paying customers.

CRO Testing vs One-and-Done CRO

This is a much more in-depth commitment than using a CRO checklist to go through your site and make sure that it’s hitting the best practices.

I definitely recommend doing that to fix basic issues and ensure you are using all of the safe, proven, boring CRO tactics that just work.

But when you run a sequence of CRO tests that follow a strategic roadmap, you are able to do things like:

- Take bigger risks with your designs

- Gain mathematical confidence that CRO wins are not random flukes

- Find incremental improvements that compound

- Understand which elements impact conversions

- Validate wins under new conditions

This allows you to take your site beyond the CRO best practices that everyone else is using.

Ultimately, you’ll create a better experience for your specific users. You will learn things about what they need, what the market is not providing, and how you can help them.

How CRO Testing Works

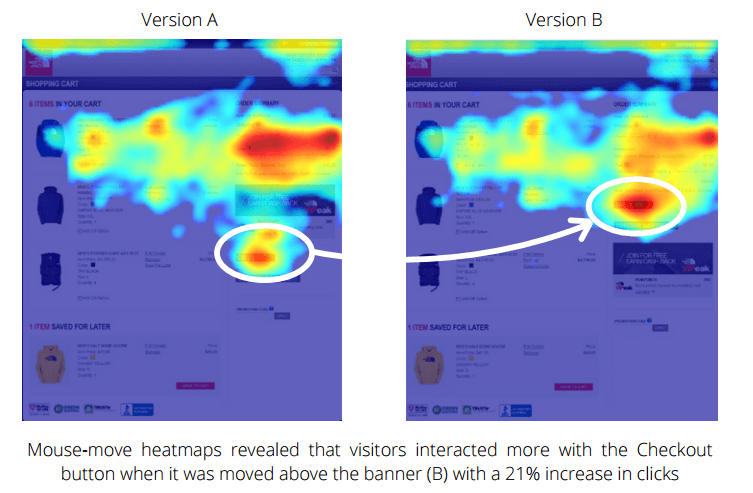

In a nutshell, CRO testing pairs conversion rate optimization with hypothesis testing in order to find the best-performing version of a page you care about. You set up experiments to test your assumptions and let the hard data guide your decision-making.

Regardless of the particular method you use to run CRO testing, the basic mechanics are the same.

- Split web traffic evenly between the control (existing page) and one or more variations (test pages).

- Let the test run long enough to collect a large enough sample of users.

- Compare the performance of each page against a specific conversion goal.

If the variation outperforms the control, there’s a case to be made for implementing the new change. If the variation performs on par with or below the control, it’s not worth making this change.
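Here’s a minimal Python sketch of those mechanics. It is an illustration, not how any particular testing platform works. The `assign_variant` helper, the hashing scheme, and the session counts are all assumptions I made up for the example, and the comparison uses a standard two-proportion z-test.

```python
import hashlib
import math


def assign_variant(user_id: str, variants=("control", "variation")) -> str:
    """Deterministically bucket a user so they always see the same page."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    return variants[int(digest, 16) % len(variants)]


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical results: 5,000 sessions on each page.
z, p = two_proportion_z_test(conv_a=200, n_a=5000, conv_b=250, n_b=5000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value suggests the difference is unlikely to be a random fluke. Where you set the cutoff is a judgment call, and your testing platform will usually make it for you.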

Continual CRO testing allows teams to try tons of different ideas, find the winners, and improve their conversion rates over time.

Most businesses use a specialized platform to run CRO testing that allows them to run tests on their site safely. These platforms enable non-specialists to build test pages quickly, and handle all the backend traffic randomization, traffic splitting, statistical analysis, etc.

Common Types of CRO Testing

Every platform allows you to run one or more of the following types of tests:

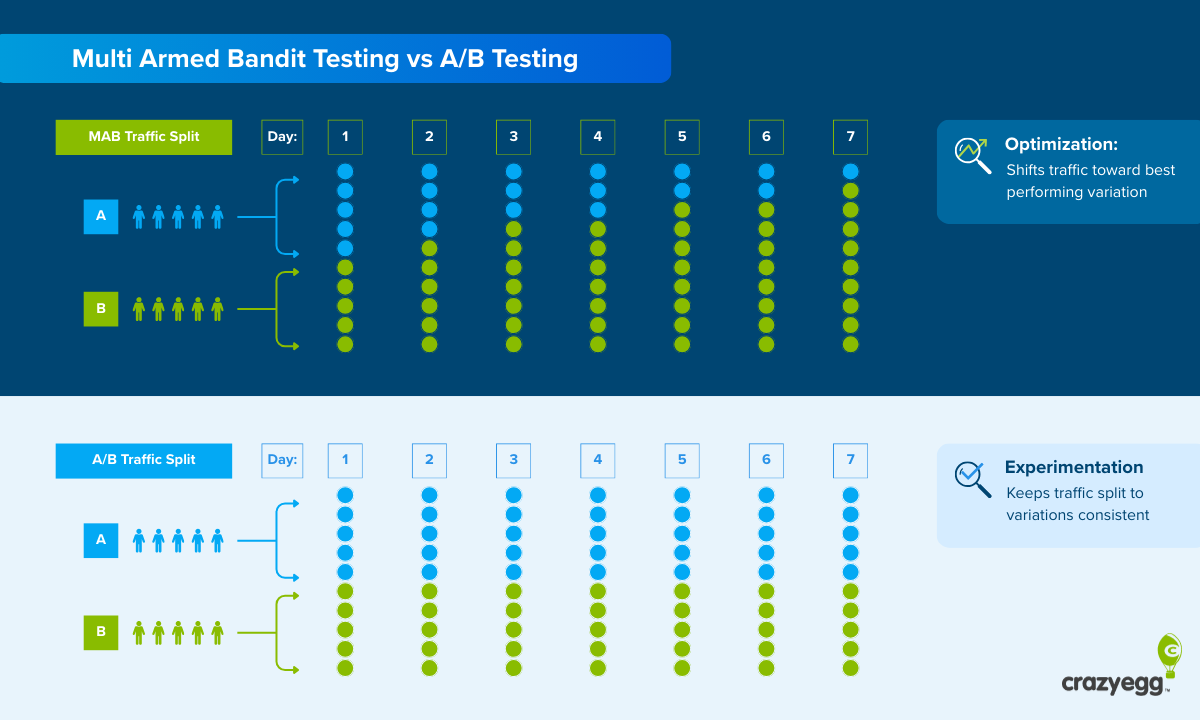

- A/B Testing: Traffic is split between two versions of a web page where a single element has been altered (A, the control, and B, the variant). Learn more about A/B testing.

- Split testing: Traffic is split between two versions of a web page where multiple page elements (or even the entire design) have been altered. Learn more about split testing.

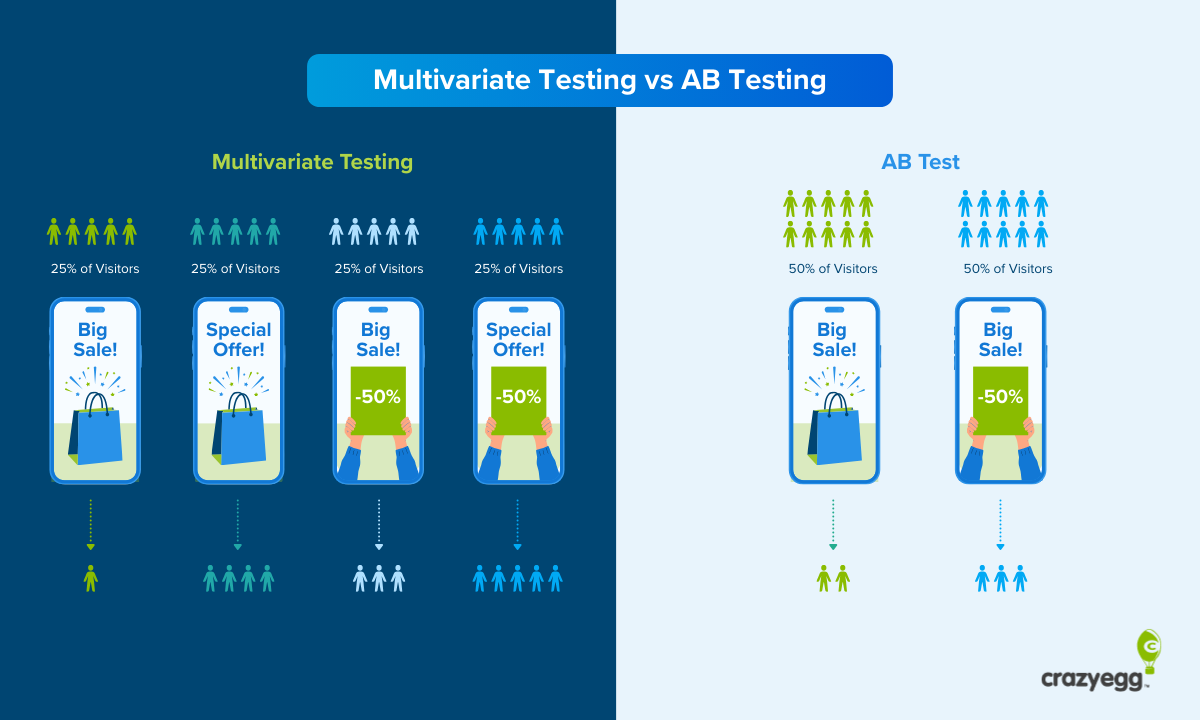

- Multivariate Testing (MVT): Traffic is split between 4+ versions of a web page where multiple elements have been changed. Learn more about multivariate testing.

In A/B testing and split testing, you are looking at head-to-head performance.

How did one page experience perform compared to the other?

With A/B tests, you can isolate individual elements and understand their impact, whereas split tests can look at the impact of larger structural changes, like different layouts or page templates.

Using multivariate testing, you can assess the performance of more than two page experiences simultaneously. It can help you understand how the different page elements work together, and compare the performance of multiple variations of a particular page element during a single test.
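To see why multivariate tests need so many versions, here’s a rough sketch that enumerates every combination of two page elements. The element names, their options, and the monthly traffic figure are hypothetical and only there to make the arithmetic concrete.

```python
from itertools import product

# Hypothetical page elements and the options being tested for each.
elements = {
    "headline": ["current", "benefit-led"],
    "cta_color": ["blue", "orange"],
}

# Every combination of options becomes its own page variation.
variations = [dict(zip(elements, combo)) for combo in product(*elements.values())]

monthly_sessions = 20_000  # illustrative traffic figure
sessions_each = monthly_sessions // len(variations)

for number, variation in enumerate(variations, start=1):
    print(number, variation)
print(f"{len(variations)} variations, about {sessions_each} sessions each per month")
```

Add a third element with two options and the count doubles again, which is why traffic becomes the limiting factor so quickly.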

Which type should you use for CRO testing?

Start with A/B testing. It is easy to set up and relatively inexpensive, and all popular A/B testing tools enable people without a statistics background to run legitimate experiments.

Many A/B testing tools allow you to run split testing as well, which can be really helpful if you want to test more dramatic changes to a page.

If you have the traffic for multivariate testing (at least 20,000 sessions per month on the page you want to test), it’s definitely worth pursuing.

Businesses that have the traffic and resources routinely use all of these types of testing.

8 Steps to Create a CRO Testing Roadmap

Creating this document might seem tedious, but it is absolutely vital.

Here’s why:

- It enables you to do better resource planning. Do you need to hire people to create test assets now (devs, designers, writers, etc) or can it wait a few months?

- It ensures you are focused on a business goal. You will know exactly how the tests you run tie into your tactical > strategic > business goals.

- It allows you to run more complex tests. Changing checkout page design to focus on free shipping or tweaking pricing requires buy-in you have to plan around.

- It keeps everyone accountable to the original mission. It’s easy to get pulled off on a side-quest based on interesting results.

I could go on, but any one of these reasons alone is enough to justify creating a roadmap.

Outlined below is a very simple step-by-step process for creating a CRO roadmap to guide testing.

1. Clarify your business goal

Start by answering a simple question: what long-term business goal do you hope to reach with testing?

For an ecommerce company, this might be: reduce shopping cart abandonment. That would definitely drive revenue.

It’s important that your business goal is specific, measurable, and that everyone agrees on how the goal is measured.

Let’s say you get tasked with using CRO testing to “increase brand engagement.” This is not very specific or measurable in a direct way.

There are a number of different customer engagement metrics you could certainly track (like social shares), and as long as everyone agrees on a specific metric, that’s fine.

The goal and metric you pick will guide all decisions about CRO testing. There is no reason to run a test that does not help you reach this goal.

2. List strategic goals to meet business goal

Drill down further: what are some strategies you can adopt to reach your business goal?

Let’s use the example of reducing shopping cart abandonment. Some strategies might be:

- Increase checkout completion rate

- Improve cart recovery email conversion rate

- Lower barriers to purchase

Think of these as different paths that lead to the same destination. If you can successfully enact a strategy that improves the checkout completion rate, for example, that will directly reduce the number of users who abandon their cart.

Make a list of all such strategic goals that will directly impact your business goal.

3. List tactical goals to reach strategic goals

Your tactical goals are actions you can take to reach your strategic goals. This is the basic plan of attack for enacting a strategy to hit a business goal.

For example, an online store using CRO testing could have the following:

- Business goal: Reduce shopping cart abandonment.

- Strategic goal: Increase the checkout completion rate.

- Tactical goal: Remove distractions from the checkout page.

There are many, many different actions you can take to hit this tactical goal, such as:

- Reducing outgoing links

- Showing checkout progress

- Increasing visibility of trust signals and social proof

- Limiting form fields

These all are testable actions, specific changes that you can make to a web page and then measure the effect.

Because they tie back to your strategic and business goals, any positive effect you discover during CRO testing can be used to further those goals.

When you’re in the planning stage, it’s a good idea to follow a hierarchy of complexity when listing these tactical goals. List the simple goals first (like removing outgoing links) before moving on to more complex, high-effort goals (like adding new types of social proof).

These tactical goals will be the foundation of your testing idea brainstorming session.

4. Find your existing performance baseline

Now that you have some sense of what metrics are going to matter for your test, you want to get a clear sense of how the pages you want to test perform today.

Some of the basic metrics you should get data on include:

- Sessions

- Conversions

- Conversion rate (total conversions / total sessions)

- Engagement rate (1 minus the bounce rate)

- Exit rate

You should have this data split out for desktops and mobile (and tablets, if they form a significant share of page traffic).
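As a quick sketch of what that baseline might look like, here’s how you could compute conversion rate and engagement rate per device. The `PageStats` class and all of the numbers are hypothetical. In practice you would pull these figures from your analytics tool.

```python
from dataclasses import dataclass


@dataclass
class PageStats:
    sessions: int
    conversions: int
    bounces: int  # sessions that left without engaging

    @property
    def conversion_rate(self) -> float:
        # Total conversions / total sessions.
        return self.conversions / self.sessions

    @property
    def engagement_rate(self) -> float:
        # Treated here as 1 minus the bounce rate.
        return 1 - self.bounces / self.sessions


# Hypothetical 30-day numbers for one page, split by device.
baseline = {
    "desktop": PageStats(sessions=12_000, conversions=480, bounces=4_800),
    "mobile": PageStats(sessions=18_000, conversions=360, bounces=9_900),
}

for device, stats in baseline.items():
    print(f"{device}: conversion rate {stats.conversion_rate:.1%}, "
          f"engagement rate {stats.engagement_rate:.1%}")
```

In this made-up example, the blended conversion rate would hide the fact that mobile converts at half the rate of desktop, which is exactly why the split matters.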

Other businesses, especially ecommerce businesses, will want to get a sense of other metrics, such as:

- Add-to-cart rate

- Cart abandonment rate

- Refund/return rate

- Average order value (AOV)

- Revenue per session

For an ecommerce business, AOV might be the key metric. If your company faces high shipping costs, a rising AOV means you are making more money on every order.

Figure out what metrics matter and then understand where they are today. That baseline matters a lot more than any sort of general data about average conversion rates.
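To make the revenue metrics concrete, here’s a small sketch using the usual definitions: AOV is revenue divided by orders, and revenue per session is revenue divided by sessions. The `revenue_metrics` helper and the figures are invented for illustration.

```python
def revenue_metrics(sessions: int, orders: int, revenue: float) -> dict:
    """Compute conversion rate, AOV, and revenue per session for one page."""
    return {
        "conversion_rate": orders / sessions,
        "aov": revenue / orders,
        "revenue_per_session": revenue / sessions,
    }


# Hypothetical numbers: the variation converts better but sells cheaper orders.
control = revenue_metrics(sessions=10_000, orders=300, revenue=24_000.0)
variation = revenue_metrics(sessions=10_000, orders=330, revenue=23_100.0)

for name, m in (("control", control), ("variation", variation)):
    print(f"{name}: CR {m['conversion_rate']:.2%}, "
          f"AOV ${m['aov']:.2f}, RPS ${m['revenue_per_session']:.2f}")
```

In this made-up scenario the variation wins on conversion rate but loses on AOV and revenue per session, which is why you want baselines for the revenue metrics and not just conversions.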

5. Brainstorm testing ideas

With an accurate idea of where individual page performance stands today, you will have a good sense of the magnitude of change you need to see in order to reach your goals.

The next step is to brainstorm ideas to enact each tactical goal.

These ideas need to be appropriate for the type of CRO testing you are running.

When A/B testing, for example, any proposed ideas should be specifically focused on a single page element.

If you are multivariate testing, on the other hand, you could pick several elements and different layout ideas to test at the same time.

Regardless of the method, you want to make sure that any test idea has a high likelihood of helping the page reach the specific tactical goal. This takes a mix of common sense, market research, and imagination.

For example, if your tactical goal is to “use social proof on product page,” you might brainstorm ideas like:

- Add testimonials from customers on product page

- Show total number of social media fans

- Show number of purchases in real-time

These are all very common persuasion techniques that brands have used for decades to leverage the power of social proof.

The best way to find unique ideas to test is to talk to your existing customers. Run surveys on your site and hear directly from your users about what they would like to see, where your site falls short, and why they didn’t purchase.

6. Score and prioritize tests

The next step is to figure out which tests to run first.

There are two approaches to do this:

- Resource-first approach: Take stock of your existing resources and figure out which tests you can run with them. For instance, if you have an in-house copywriter, you can prioritize testing headlines and sales copy before testing other elements.

- Results-first approach: Prioritize tests based on their expected results (based on similar case studies and historical data) and go about acquiring resources accordingly.

Regardless of which approach you choose, the key to success and staying organized is making good judgments about which tests are going to be easier/harder to run, and which tests have a larger/smaller opportunity.

There’s no perfect way to score tests, but this two-factor system has worked for me in the past:

1. Ease of implementation (1-10)

This is simply a measure of how easy the test would be to implement based on:

- Your existing resources

- Your ability to acquire future resources

- The technical complexity of the test

Give each test a numerical value on a scale of 1-10 with 10 meaning “very easy” and 1 being “very hard”.

For example, if you have an in-house copywriter, testing a headline would be a “10”. If you had to hire a freelance copywriter, your ease score would drop to a “6”.

More complex tasks, such as adding a highly customized opt-in popup, would get low ease scores.

2. Impact (1-10)

This is a measure of the expected impact of the test on your target metric based on:

- Historical data: Whether similar changes to your site in the past have yielded a net positive impact on the target metric

- Case studies from similar businesses in the same industry.

- Test target: If you’re testing to improve CTR for a CTA, changing CTA copy would have a more immediate impact than adding a disclaimer to the page footer.

Score the impact on a scale of 1-10 with 10 being “very high impact” and 1 being “low impact”.

For example, if historical data and case studies show that adding social proof to an ecommerce product page improves results considerably, you’d classify it as an “8”.

On the other hand, changes like adding trust signals or simplifying menu navigation are worth making, but they are less likely to deliver monumental gains, so they would get a lower impact score.

Ideally, you want to prioritize tests that are easy to implement and have a high net impact on your success.
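Here’s one way you could turn the two scores into a ranked list. The article’s system doesn’t prescribe how to combine them, so multiplying ease by impact is purely my assumption for this sketch, and the test ideas and scores are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ExperimentIdea:
    name: str
    ease: int    # 1 = very hard, 10 = very easy
    impact: int  # 1 = low impact, 10 = very high impact

    @property
    def priority(self) -> int:
        # Assumption: multiply the scores so a test must do well on both.
        return self.ease * self.impact


ideas = [
    ExperimentIdea("Add testimonials to product page", ease=6, impact=8),
    ExperimentIdea("Rewrite product page headline", ease=10, impact=6),
    ExperimentIdea("Custom opt-in popup", ease=3, impact=7),
    ExperimentIdea("Footer disclaimer", ease=9, impact=2),
]

# Highest combined score first.
ranked = sorted(ideas, key=lambda idea: idea.priority, reverse=True)
for idea in ranked:
    print(f"{idea.priority:>3}  {idea.name}")
```

Adding the scores instead of multiplying them would also work. Multiplying just penalizes tests that score very low on either factor.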

7. Develop your testing hypothesis

Once you’ve prioritized your tests, the next step is to formalize your experimental design by writing a clear, testable hypothesis. A good hypothesis ties your tactical idea to a strategic goal, with a specific prediction about user behavior.

Here’s an example:

“We believe that adding customer testimonials to the product page will increase the conversion rate because social proof builds trust with prospective buyers.”

This kind of hypothesis focuses on a single change (adding testimonials) and defines both what you’re testing and why.

It’s important to remember that one CRO test can’t validate a broad concept like “social proof builds trust” in general. It only evaluates the effect of one particular page experience vs another.

Most businesses use A/B testing to evaluate one variation of one element at a time. That means investigating even a single tactical goal usually requires multiple hypotheses and multiple tests.

For example, you might want to test:

- Making the CTA button larger

- Changing it to a more contrasting color

- Adjusting the button’s placement or style

Each of these requires its own hypothesis and test, even though they all support the same underlying goal.

8. Create your CRO testing roadmap

At this point, you have everything you need to start building out a useful CRO roadmap.

This document will be essential for planning, creating tests on schedule, and staying organized during testing. It also makes it easier for you to review progress and share results.

I would put this in a spreadsheet unless you have a really good reason not to, and include columns for:

- Experiment name: Use a short but descriptive name. Say, something like “Product Page CTA Color”.

- Experiment description: Describe the test in detail. Note what you’re doing on the page and how versions A and B differ.

- Hypothesis: I would include the full hypothesis statement you created.

- Collateral: List and link to any marketing collateral here, such as the version A and B designs.

- Target page: The URL of the page you’re testing.

- Ease-of-implementation: List the score as a numerical value.

- Impact: List the score as a numerical value.

- Status: Use a single-select dropdown to denote the current stage of testing (i.e. planning, building, testing, complete).

- Existing conversion rate: The typical conversion rate of the page today.

- Target conversion rate: The conversion rate goal for the test.

Additionally, I’d include any other key metrics that are tied to your business goals. Things like AOV or revenue per session are often more important metrics than conversions.
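If you want a head start on the spreadsheet, here’s a minimal sketch that writes the columns above to CSV text you can import into any spreadsheet tool. The column keys, the `roadmap_to_csv` helper, the URLs, and the scores are all hypothetical placeholders.

```python
import csv
import io

COLUMNS = [
    "experiment_name", "description", "hypothesis", "collateral",
    "target_page", "ease", "impact", "status",
    "existing_conversion_rate", "target_conversion_rate",
]
STATUSES = {"planning", "building", "testing", "complete"}


def roadmap_to_csv(experiments: list[dict]) -> str:
    """Validate each experiment's status and return the roadmap as CSV text."""
    for exp in experiments:
        if exp["status"] not in STATUSES:
            raise ValueError(f"Unknown status: {exp['status']!r}")
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=COLUMNS)
    writer.writeheader()
    writer.writerows(experiments)
    return buffer.getvalue()


# One hypothetical row; URLs and numbers are placeholders.
csv_text = roadmap_to_csv([{
    "experiment_name": "Product Page Testimonials",
    "description": "Version B adds three customer testimonials below the CTA.",
    "hypothesis": "We believe that adding customer testimonials to the product "
                  "page will increase the conversion rate because social proof "
                  "builds trust with prospective buyers.",
    "collateral": "https://example.com/designs/testimonials",
    "target_page": "https://example.com/product/widget",
    "ease": 6,
    "impact": 8,
    "status": "planning",
    "existing_conversion_rate": 0.04,
    "target_conversion_rate": 0.05,
}])
print(csv_text)
```

You could add columns for AOV or revenue per session the same way by extending `COLUMNS`.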

Tips for Getting Started with CRO Testing

If you have never used the testing platform before, I would run a test experiment on a page that you don’t care about before you run your first real test.

It’s better to work out any kinks in the testing process on some unseen page as opposed to on your pricing page with live traffic.

Once you feel good about the platform, I would start with tests that have high impact and ease of implementation scores.

Then you have to wait at least two weeks for test results to come in.
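How long a test really needs depends on your traffic and the size of the lift you hope to detect. Here’s a rough estimate using a common sample-size approximation for comparing two conversion rates. The 5% significance level, 80% power, traffic figure, and helper names are my assumptions, and your testing platform may calculate this differently.

```python
import math
from statistics import NormalDist


def sessions_per_variant(baseline_rate: float, target_rate: float,
                         alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate sessions needed on each page to detect the lift."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = (baseline_rate * (1 - baseline_rate)
                + target_rate * (1 - target_rate))
    effect = target_rate - baseline_rate
    return math.ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


def estimated_test_days(n_per_variant: int, daily_sessions: int,
                        variants: int = 2, minimum_days: int = 14) -> int:
    """Days to reach the sample size, never less than the two-week floor."""
    days_needed = math.ceil(n_per_variant * variants / daily_sessions)
    return max(days_needed, minimum_days)


# Hypothetical page: 4% baseline, hoping for 5%, 1,000 sessions per day.
n = sessions_per_variant(baseline_rate=0.04, target_rate=0.05)
days = estimated_test_days(n, daily_sessions=1_000)
print(f"{n} sessions per variant, roughly {days} days")
```

Smaller expected lifts or lower traffic push the duration well past two weeks, so it is worth running this kind of estimate before you commit to a schedule.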

Use the downtime to look ahead to the high-impact, high-effort tests. Which resources will you need to build out those tests? Do you need developers, writers, or a sign-off from the sales team that’s going to take a few weeks to approve?

Make sure that you update the testing roadmap with the results as they come in.

When you start out, most of your test ideas are going to be based on looking at competitor strategies, reading case studies, investigating your site’s web analytics, and general market research.

As you progress through CRO testing, you will start to get really good data on specific pages that either supports or challenges these assumptions.

For many CRO efforts, it’s the lessons learned along the way that result in the most dramatic wins. Being able to respond to fresh data as it comes in and tweak your test ideas in light of these insights is crucial.

This means you can’t build too many tests ahead of time.

I think it’s a good idea to have one test built and ready to go per page that you are testing. That way, if something breaks or the test results are complete trash, you have a test ready to go in the hopper.

But if you start to build out tests far in advance, it’s going to result in a lot of wasted effort because the tests won’t incorporate the latest results. Sometimes you can tweak a pre-built test before you run it, but it’s not always possible.