Nobody knows what’s going to work. Even the smartest people are consistently bad at predicting outcomes.

This is why you run A/B testing, which is nothing less than using the scientific method to investigate whether an idea works. It’s used successfully in drug discovery, manufacturing, and yes, optimizing conversion rates on websites.

But increasing sales and signups is hardly the only benefit of A/B testing. And it’s not the most important one, either.

Beyond the Cliché Benefits of A/B Testing

Researching this post, I found about 70 articles that listed the same benefits: increasing conversion rates, driving engagement, improving ROI, and reducing bounce rates.

It’s true. Those are all real benefits of A/B testing . . . that come with months or years of diligent work.

But if you read those articles, they make it seem like you run one test and get massive gains the next day.

Even some of the big-name A/B testing platforms had posts like this.

Come on. Of all people, they should know better.

I’ve only run several hundred A/B tests, which makes me a rookie compared to the talented people at Google, Amazon, Airbnb, and other massive brands with teams that run thousands of experiments a year.

But I can tell you right now that if you go in expecting A/B testing to deliver huge conversion lifts by testing a few ideas, you are going to be disappointed.

The truth is you can increase conversion rates, drive engagement, and do all of that with A/B testing. There are plenty of examples of A/B testing where teams find monster wins.

But if you go into it with a clear-eyed sense of the work it’s going to take, then you are going to come out with wins that go far beyond the conversion lifts.

7 Realistic Benefits of A/B Testing

I’m just going to jump right into the benefits in this section. If you are at all unclear about the mechanics or purpose of randomized controlled testing on websites, review this post on the basics of A/B testing.

1. It proves whether your change worked

You can be confident you understand causation

Website metrics move for all sorts of reasons that have nothing to do with changes you made on your site. First of all, there is just randomness in the world. If you track clicks and conversions, you’ll see them go up and go down, even when you change nothing about your site.

Then you have to factor in your competitors, who are running sales, buying ads, and doing their best to get in front of the same audience. That’s going to influence the traffic that shows up on your site and how it behaves.

So if you make a change to a page and see that conversions went up, you cannot say for certain that it happened because of your change.

A/B testing is the most reliable way to separate the effect of your change from all that noise. It compares two versions of your site over the exact same period:

- Half the visitors see your existing page (the control)

- Half the visitors see the modified version (the variation)

Any outside factor like seasonality or competitor campaigns affects both of those groups roughly equally, because visitors are assigned to them at random. This allows you to make a valid comparison of the two versions.
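Your testing tool handles the split for you, but it helps to see what random assignment looks like under the hood. Here is a minimal sketch of one common approach, assuming every visitor has a stable ID (the `user_id` values and the experiment name are hypothetical). Hashing the ID gives each visitor a fixed, effectively random bucket, so they see the same version on every visit and outside factors land in both groups in roughly equal measure.

```python
import hashlib

def assign_variant(user_id: str, experiment: str, variation_share: float = 0.5) -> str:
    """Deterministically assign a visitor to "control" or "variation"."""
    # Hash the experiment name together with the user ID, so each experiment gets
    # an independent split and a returning visitor always sees the same version.
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    # Map the first 8 hex digits (32 bits) to a number in [0, 1).
    bucket = int(digest[:8], 16) / 0x100000000
    return "variation" if bucket < variation_share else "control"

# Simulate 100,000 hypothetical visitors to check the split.
counts = {"control": 0, "variation": 0}
for i in range(100_000):
    counts[assign_variant(f"user-{i}", "homepage-headline")] += 1
print(counts)  # roughly 50,000 in each group
```

The `variation_share` parameter is also how you would send only 10-20% of traffic to a risky variation.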

If the variation performs better by more than random chance can explain (a statistically significant result), then you can be confident that the change you made is responsible for the improvement. If it performs worse, you can be confident the change was not effective.

Whether you see improvement or decay in the results, the beauty of A/B testing is that you know the cause.
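"Performs better" has to mean better by more than chance would explain. For conversion rates, one common check is a two-proportion z-test. Below is a minimal sketch with hypothetical visitor and conversion counts; most testing platforms run a check like this for you, and some use different statistical methods.

```python
import math

def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Two-sided z-test for a difference between two conversion rates (A = control, B = variation)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    # Pooled rate under the null hypothesis that both versions convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Hypothetical numbers: control 500/10,000 (5.0%), variation 580/10,000 (5.8%).
z, p = two_proportion_z_test(500, 10_000, 580, 10_000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value (many teams use 0.05 as the cutoff) says a gap this large would be unlikely if the change did nothing.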

Bear in mind there are still ways that noise can creep into A/B test results. You have to be alert to faulty test designs, sample ratio mismatch, tracking issues, and other problems. I’ll highlight those issues and safeguards to prevent them in the tips section.

2. It lets evidence drive your strategy

You can make decisions based on data instead of opinions

Website changes are influenced by factors that shouldn’t matter, like seniority, personal taste, egos, and company politics.

None of these factors should influence decision-making, but they do. You know it. I know it. And we both know that it comes up a lot in marketing and brand decisions where there’s no single “correct” answer.

A/B testing is a wonderful check on all the opinions that work their way into our website strategy without being questioned. You can test them and determine whether they are actually true!

Instead of going with someone’s “I know a good website when I smell it” intuition, you can move forward based on data. If an idea worked well, run with it. If it didn’t, cancel it. Whose idea was it? Doesn’t matter.

Running A/B tests is also a great way to challenge assumptions and cookie-cutter best practices that may not hold true on your site.

- Is cheaper always better? Not for some types of buyers, and maybe that’s who you really want to convert.

- Should we minimize form fields as much as possible? Usually, but sometimes it will lower the quality of the leads you generate and waste your sales team’s time.

- Does every page need one and only one call to action (CTA)? Of course not. You can have one primary CTA for high-intent users and a secondary one to pick up emails from people who aren’t ready to pull the trigger yet.

Maybe adding some friction to your form results in generating higher quality leads. Maybe not. Without testing the idea, you can’t know for sure.

With A/B testing, you can get data on how real users respond to your ideas. Then you can make decisions about what to do next based on hard evidence.

3. It lowers the risk of disaster redesigns

You can avoid spending months on building something that hurts performance

My old boss paid a talented agency with a great track record $400,000 to help rebrand one of his company’s sites. It tanked so hard that he had to go in and manually restore the old site within a week.

Everyone on his team, himself included, loved the redesign. They felt confident it was going to be awesome, but it was a total failure.

Since then, he’s been a huge proponent of A/B testing ahead of major pivots, breaking big changes down into smaller testable parts, and making adjustments along the way. This has multiple benefits, like:

- Reducing risk: You can stop earlier in the process if something hurts performance.

- Minimizing waste: You can focus your efforts on changes that help performance and stop working on failed ideas quickly.

- Better attribution: When you test one piece at a time, you know which changes helped or hurt performance. With one big redesign, that’s hard to untangle.

This approach is slower, but it’s way less risky, and you can be a lot more confident about which changes led to which results.

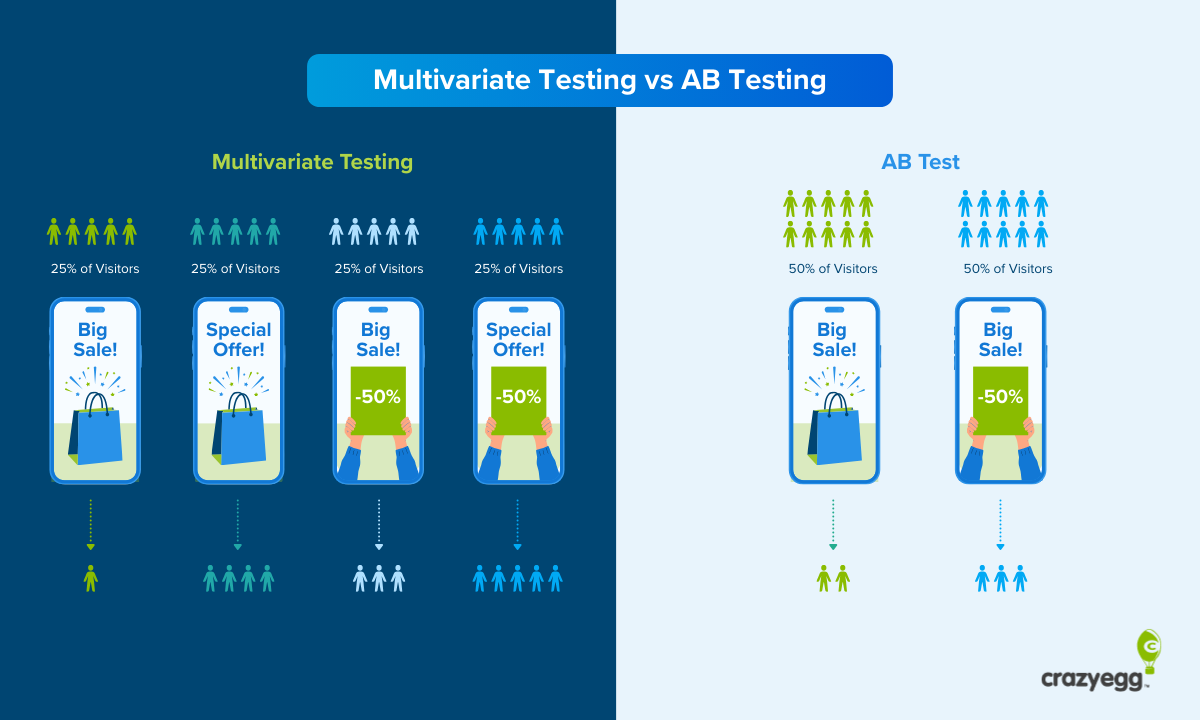

If the page has a ton of traffic, you can also consider multivariate testing, which lets you change several page elements at the same time. You’ll be able to find the best combination of elements and see how they interact.
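The reason multivariate testing needs so much traffic is simple arithmetic: a full-factorial test serves every combination of elements as its own variation, so your visitors get divided many ways. A quick sketch with hypothetical page elements and a hypothetical weekly traffic figure:

```python
import itertools

# Hypothetical page elements and the versions of each you want to test.
elements = {
    "headline": ["current", "benefit-led", "question"],
    "hero_image": ["product", "people"],
    "cta_text": ["Start free trial", "Get started"],
}

# A full-factorial multivariate test serves every combination as its own variation.
combinations = list(itertools.product(*elements.values()))
print(len(combinations))  # 3 * 2 * 2 = 12

weekly_visitors = 60_000  # hypothetical traffic
# Each combination only gets a twelfth of the traffic.
print(weekly_visitors // len(combinations))  # 5000 visitors per combination per week
```

Adding one more element with two versions doubles the number of combinations and halves the traffic each one gets.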

Regardless of the method you use, testing your way into a big change is so much safer than just deploying it blind.

4. It gives you confidence to try risky ideas

You can take bigger swings because there is a kill switch

Many teams don’t want to touch high-stakes pages that are working well enough. “If it ain’t broke, don’t fix it,” so the old saying goes. But what if you are leaving a ton of revenue on the table by letting a page sit there with only minimal updates?

With A/B testing, you have a way to experiment with new ideas on high-value pages safely. You can test different versions of individual elements, or even use a split test to try a completely new redesign and see what happens.

You don’t have to send half your traffic to the new design. Test it with 10-20% of traffic if you are worried, though it will take a bit longer for the test to gather enough data. If you have the option, you can use a multi-armed bandit test to minimize your losses.

If it works, great, you just found a win on an important page. That’s a big deal. If not, you can kill the test and know that most of your visitors never saw it. The downside is contained.
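A multi-armed bandit shifts traffic toward whichever version is performing better as the data comes in, which is why it can limit your losses on a risky idea. One common bandit algorithm is Thompson sampling. The sketch below is a simulation with made-up conversion rates, not how any particular testing platform implements it.

```python
import random

def thompson_sampling(true_rates: list[float], visitors: int, seed: int = 42) -> list[int]:
    """Simulate a Beta-Bernoulli Thompson sampling bandit; return visitors served per version."""
    rng = random.Random(seed)
    successes = [0] * len(true_rates)
    failures = [0] * len(true_rates)
    served = [0] * len(true_rates)
    for _ in range(visitors):
        # Draw a plausible conversion rate for each version from its Beta(1 + s, 1 + f)
        # posterior, then serve the version with the highest draw.
        draws = [rng.betavariate(1 + s, 1 + f) for s, f in zip(successes, failures)]
        arm = max(range(len(draws)), key=draws.__getitem__)
        served[arm] += 1
        # Simulate whether this visitor converts (on a real site this comes from tracking).
        if rng.random() < true_rates[arm]:
            successes[arm] += 1
        else:
            failures[arm] += 1
    return served

# Hypothetical scenario: the risky redesign (index 1) actually converts worse.
print(thompson_sampling([0.05, 0.03], visitors=20_000))
```

Most of the traffic ends up on the stronger version. The trade-off is that a bandit gives you a less precise estimate of how much worse the losing version was than a fixed split would.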

5. It lets you make cost-saving changes safely

You can make website improvements without risking sales

One of the most profitable ways that I have used A/B testing is to validate that we can make a useful change to our website that won’t hurt conversions.

Let’s say that we have a page with an interactive feature that is a huge pain to maintain. It costs time and money for us to keep this feature functional and up to date. What if we replace the feature with something cheaper and easier to maintain? That would save us money every month.

With A/B testing, we could experiment and find out whether or not we can get rid of this costly feature without hurting the conversion rate.

In these sorts of tests, you are looking for flat results, i.e., no meaningful drop in performance. That’s good enough for a win in a case like this. As long as sales don’t drop, we can make this change and increase the page’s profitability.
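A flat result on its own doesn’t prove nothing changed. A small test can simply be too noisy to detect a drop. One common way to make “flat” rigorous is a non-inferiority test: decide up front how big a drop you would tolerate (the margin), then check whether the variation is worse by less than that. This is a sketch with hypothetical counts and a hypothetical margin of half a percentage point.

```python
import math

def non_inferiority_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int, margin: float) -> float:
    """One-sided test that variation B is not worse than control A by more than `margin`.

    H0: p_b - p_a <= -margin  (B is meaningfully worse)
    H1: p_b - p_a >  -margin  (B is non-inferior)
    """
    p_a, p_b = conv_a / n_a, conv_b / n_b
    # Unpooled standard error of the difference in conversion rates.
    se = math.sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    z = (p_b - p_a + margin) / se
    # Upper-tail p-value: P(Z >= z) under the standard normal.
    return 0.5 * math.erfc(z / math.sqrt(2))

# Hypothetical: control converts 2,000/40,000 (5.0%); cheaper feature 1,990/40,000 (4.975%).
p = non_inferiority_p_value(2_000, 40_000, 1_990, 40_000, margin=0.005)
print(f"p = {p:.4f}")
```

A small p-value here supports the claim that the cheaper version is no more than 0.5 percentage points worse, which is a much stronger statement than “we didn’t see a significant drop.”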

The same framework applies for testing changes that improve UX, decrease technical debt, make your page more accessible, and speed up your site. These are all wins in their own right, and some, like making your site faster, will often lead to an increase in conversions as well.

6. The knowledge you build keeps paying off

Over time, you learn what tends to work for your users

After running enough tests, you start to build practical knowledge about your target audience, getting a feel for:

- Which types of changes tend to help?

- Which types of changes tend to do nothing?

- What types of copywriting resonate?

- Which persuasion techniques are effective?

These sorts of insights help you design better tests in the future, building off a record of what’s worked and what hasn’t.

Bear in mind, this library of knowledge won’t build itself. Teams need to take an active role. That means documenting test results in some sort of database that is keyword searchable.

People are going to come and go. You will amass an insane volume of test data very quickly, and without storing it in a useful way, you run the risk of forgetting learnings and running repeat tests.

7. Incremental wins add up to meaningful outcomes

You can find small wins that stack up on a page and across a funnel

I wish that you could change the button shape and watch your revenue triple, but that’s the fantasy, not the reality of A/B testing.

The big wins I have seen from A/B testing came from less dramatic experiments where we made pages slightly better over many tests.

We found better headlines with SEO A/B testing that drove a little more traffic to our site. We improved value propositions, images, and layouts, seeing small 2-3% bumps in clicks and conversions. Each win pushed our baseline performance up a little bit.

Six months in, we were looking at a 20% increase in the conversion rate from where we started. There was no single genius test that got us there.
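The math behind this is compounding: each win raises the baseline the next test starts from, so relative lifts multiply rather than add. A quick sketch with hypothetical lift sizes (not our actual test log), assuming every lift holds up after launch, which real wins don’t always do:

```python
import math

def compound_lift(lifts: list[float]) -> float:
    """Combine sequential relative lifts (0.025 = +2.5%) into one overall lift."""
    # Each win raises the new baseline, so the multipliers stack.
    return math.prod(1 + lift for lift in lifts) - 1

print(f"{compound_lift([0.025] * 8):.1%}")  # eight wins of +2.5% each; prints 21.8%
```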

This is how A/B testing winds up benefiting the teams that stick with it. They find small wins on their landing pages, forms, and confirmation pages. Little by little, each step of their website funnel gets better conversion rates, higher completion rates, and fewer drop-offs, and the end result is massively increased revenue.

Tips To Get The Most Value From A/B Testing

This section is a list of ideas and tactics you can implement to make sure that you are setting your team up for success with A/B testing and finding genuine wins on your site.

Here are the tips we’ll cover:

- Lock in a clear hypothesis

- Account for Twyman’s law

- Track countervailing metrics

- Watch for sample ratio mismatch

- Document test results

- Test a mix of small and big changes

- Create a culture of experimentation

Lock in a clear hypothesis

When you run an A/B test, it’s imperative to state your hypothesis before running the experiment. This stated hypothesis is what you test and how you judge results. It cannot be changed after the results come in.

This is because A/B tests produce tons of data, which can be broken into different segments like device type, traffic source, time of day, day of week, etc. Without stating ahead of time exactly what you are testing, a team lead who desperately wants to find a win can easily cherry-pick results from random clusters in the data.

This is a classic example of the Texas sharpshooter fallacy, in which someone fires shots into a barn and then draws their target around clusters of bullet holes. Because they chose the target after shooting, they have fooled themselves into thinking they are a sharpshooter.

The same thing happens with A/B testing, where you can slice and dice the data to make it appear like an experiment was a winner.

“We saw conversions increase among weekday mobile users in Europe,” someone might claim after finding that pattern in the data. But if they did not explicitly set out to test that idea, then they are simply pointing to a random cluster like the old Texas sharpshooter.

If they really believe that this is a win, and that segment really is worth going after, then the solution is to re-run the test with that specific hypothesis stated at the outset.
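Why are random clusters so easy to find? If a change truly did nothing and you check enough segments, some will look like winners by chance alone. A rough sketch, assuming each segment is an independent comparison at the usual 0.05 significance level (real segments overlap, so treat these numbers as illustrative):

```python
import random

def chance_of_false_win(segments: int, alpha: float = 0.05) -> float:
    """Probability that at least one of `segments` independent comparisons
    looks significant at level `alpha` when the change truly did nothing."""
    return 1 - (1 - alpha) ** segments

def simulate_false_wins(segments: int, alpha: float = 0.05, trials: int = 10_000, seed: int = 1) -> float:
    """Monte Carlo check: under no real effect, each segment's p-value is uniform on [0, 1]."""
    rng = random.Random(seed)
    hits = sum(any(rng.random() < alpha for _ in range(segments)) for _ in range(trials))
    return hits / trials

print(f"{chance_of_false_win(20):.0%}")   # 20 slices of the data; prints 64%
print(f"{simulate_false_wins(20):.0%}")   # simulation lands close to the formula
```

Slice a no-effect test 20 ways and you have roughly a two-in-three chance of finding at least one “winning” segment.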

Check out this post on hypothesis testing to learn more about writing clear hypotheses and why it is so important for running valid tests.

Account for Twyman’s law

There’s a principle in data analysis, attributed to media researcher William Anthony Twyman, that states, “Any figure that looks interesting or different is usually wrong.”

He didn’t say it was always wrong, but a really interesting or far-out result is definitely worth a second look.

So if you typically see small conversion lifts from your A/B tests and then suddenly you see a 23% conversion lift, your first instinct should be to investigate the result.

Was traffic truly randomized? Is there a broken script? Is there some external factor that impacted one of the groups?

You can get awesome results using A/B testing, but treating them with a little bit of extra skepticism is healthy. The cost of implementing a false win is really high.

Track countervailing metrics

Say you want to increase the amount of revenue generated on a page. Okay, display more ads. That should increase revenue.

But is there any downside to more ads? Of course. You and I have both landed on pages where we are bombarded by ads, and we never go back to that site. Short-term, they gained some revenue, but over the long term that strategy will destroy their site.

This is why you should track more than just your primary metric (like purchases, revenue, or conversions) to make sure that any wins you get from testing don’t inadvertently create a loss somewhere else.

This idea comes from Ronny Kohavi, one of the most experienced A/B testers in the world, who has literally written the book on it. His view, based on years of data, is that it’s easy to find short-term wins that are not actually good for your business. By picking a countervailing metric ahead of testing, you can check that your wins won’t hurt your business over the long term.

Some examples of useful countervailing metrics include:

- Customer retention: Do users return after you make the change?

- Customer lifetime value: Are you attracting customers who stay longer and spend more?

- Ratings and satisfaction scores: Are you making users happier or more frustrated?

- Lead quality: Are you getting more leads who turn into opportunities or buyers?

It’s easy to make a form shorter and drive up the number of people who complete it. But if you are keeping tabs on lead quality, you might see that your new form is generating trash leads that waste your sales team’s time. That’s no good.

Similarly, you could offer a massive discount to get more people to convert, but if they wind up being one-time customers, it’s probably not a long-term profitable strategy. By keeping tabs on customer lifetime value, you will be able to track this issue. If you only look at the conversion rate, you will get into trouble over time.
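One way to make countervailing metrics part of the decision, rather than an afterthought, is to write the shipping rule down before the test starts. Below is a minimal sketch of such a rule. The metric names, the numbers, and the 2% tolerance are all hypothetical, and it compares raw values only; in practice each comparison needs its own significance check.

```python
from dataclasses import dataclass

@dataclass
class MetricResult:
    name: str
    control: float
    variation: float
    higher_is_better: bool = True

    def relative_change(self) -> float:
        """Relative change of variation vs. control, signed so positive means better."""
        change = (self.variation - self.control) / self.control
        return change if self.higher_is_better else -change

def ship_decision(primary: MetricResult, guardrails: list[MetricResult], max_drop: float = 0.02) -> bool:
    """Ship only if the primary metric improved and no guardrail got worse by more than max_drop."""
    if primary.relative_change() <= 0:
        return False
    return all(g.relative_change() >= -max_drop for g in guardrails)

# Hypothetical shorter-form test: more completions, but lead quality fell.
primary = MetricResult("form completions", control=400, variation=520)
guardrails = [MetricResult("lead-to-opportunity rate", control=0.25, variation=0.18)]
print(ship_decision(primary, guardrails))  # False: lead quality dropped 28%
```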

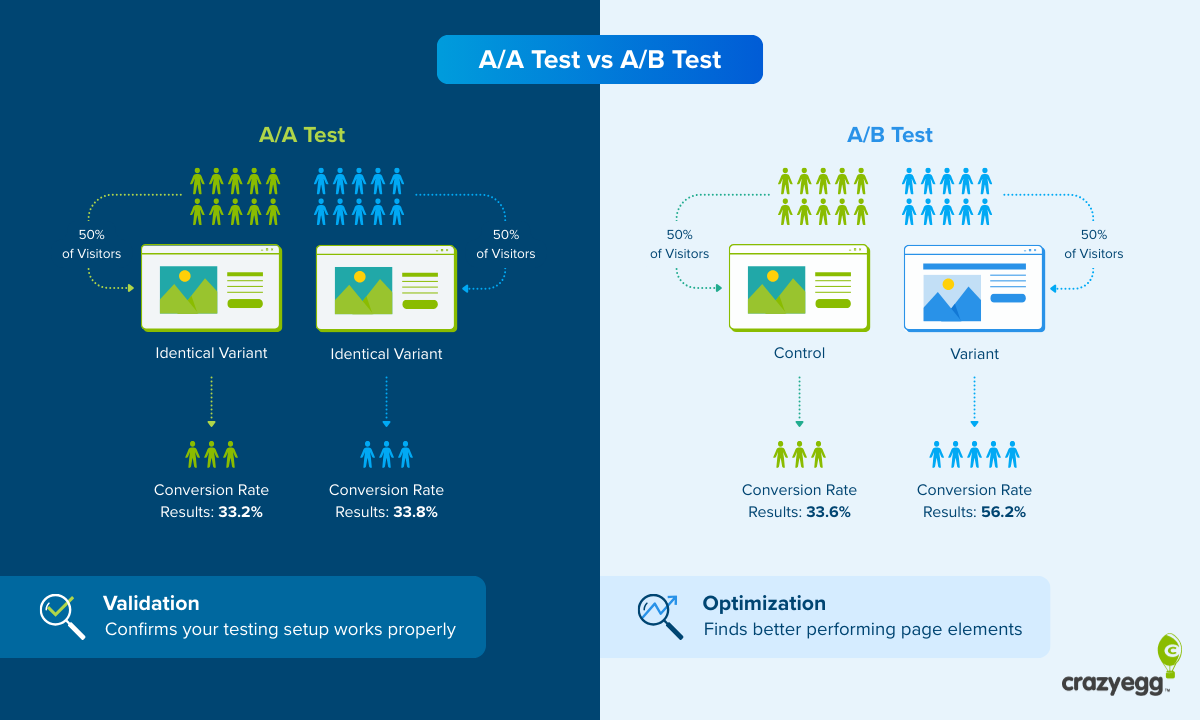

Watch for sample ratio mismatch (SRM)

The validity of an A/B test relies on the randomized split of traffic going to the different variations. If that split is not truly random, then the results are not valid and you need to re-run the test.

This error is known as sample ratio mismatch (SRM). For example, say returning users were much more likely to be shown the variation than new users were. That’s going to skew results. The same goes for a higher share of mobile users in one group than in the other.

SRM can create a difference in results that has nothing to do with your change, and if you don’t catch it, you will be fooled into thinking your change caused the outcome.

One of the biggest red flags of SRM is a gap between the actual traffic split and the expected split. For example, you might see that 52% of users viewed the control and 48% of users viewed the variation. You expected a 50/50 split, but that’s not what you see. With large samples, even a gap that small is very unlikely to happen by chance. It’s worth digging in to see whether traffic sources, device types, or other segments of users were split unevenly.
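Whether a 52/48 split is a red flag depends on how many users you have. A common SRM check is a chi-square goodness-of-fit test on the visitor counts. This sketch uses hypothetical counts; many teams flag SRM only at a strict threshold such as p < 0.001, since the check runs on every test.

```python
import math

def srm_p_value(control_users: int, variation_users: int, expected_control_share: float = 0.5) -> float:
    """Chi-square goodness-of-fit test (1 degree of freedom) for a two-group traffic split."""
    total = control_users + variation_users
    expected = [total * expected_control_share, total * (1 - expected_control_share)]
    observed = [control_users, variation_users]
    chi2 = sum((o - e) ** 2 / e for o, e in zip(observed, expected))
    # With 1 degree of freedom, P(chi-square > x) equals erfc(sqrt(x / 2)).
    return math.erfc(math.sqrt(chi2 / 2))

# The same 52/48 split at two different (hypothetical) sample sizes.
print(f"{srm_p_value(520, 480):.3f}")       # 1,000 users: plausible chance variation
print(f"{srm_p_value(5_200, 4_800):.6f}")   # 10,000 users: almost certainly SRM
```

At 1,000 users the p-value is around 0.2, so the gap is unremarkable. At 10,000 users it is far below 0.001, and the test results shouldn’t be trusted until you find the cause.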

Good A/B testing tools have built-in safeguards to avoid SRM or, at the very least, flag it when it occurs. But the tools are not perfect, so your team should be aware of it and how to assess whether or not SRM is polluting the results.

Document test results

There is a ton of value in keeping a clear, searchable record of test results. It’s a bit of extra work, but without documentation, you run the risk of repeating the same ideas and having to relearn lessons, both of which are wasteful and expensive.

You don’t need to write long reports, just a simple document or spreadsheet entry that captures:

- What you changed

- Why you tested the change

- The metrics you tracked

- The results

- Whether you shipped the change or not

With this information, anyone on the team can quickly discover whether or not you have run a similar test in the past. This avoids retesting ideas, which is easy to do when you are running lots of tests over the course of several months or years.
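A spreadsheet works fine for this. To show how little structure you actually need, here is a minimal sketch of a test log holding the five fields above, plus a keyword search across them. The field names and the two example entries are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class ExperimentRecord:
    change: str          # what you changed
    rationale: str       # why you tested the change
    metrics: list[str]   # the metrics you tracked
    result: str          # the results
    shipped: bool        # whether you shipped the change

def search(records: list[ExperimentRecord], keyword: str) -> list[ExperimentRecord]:
    """Case-insensitive keyword search across the text fields of each record."""
    kw = keyword.lower()
    return [
        r for r in records
        if kw in " ".join([r.change, r.rationale, r.result, *r.metrics]).lower()
    ]

# Hypothetical entries.
log = [
    ExperimentRecord("Removed phone field from demo form", "Reduce friction",
                     ["form completions", "lead quality"], "+12% completions, lead quality down", False),
    ExperimentRecord("Benefit-led headline on pricing page", "Clarify value proposition",
                     ["trial starts"], "Flat", False),
]
print([r.change for r in search(log, "form")])  # finds only the demo form test
```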

It also makes it easier to pick up on patterns. What does your target market tend to respond well to? What seems to fail every time?

And it helps you improve your testing roadmap. When you can look back at past results, you can plan better tests built on evidence.

Test a mix of small and big changes

Small, low-risk tests where you are refining a page and reducing friction are great, but you want to consistently work in some higher-risk, higher-reward tests as well.

Doing small tests is safe, but without testing more adventurous changes you put a lower ceiling on what testing can achieve. Without taking big swings, you aren’t giving yourself a chance to discover new designs, positioning, or messaging that could really move the needle.

Big changes usually fail, so it’s important to have a steady stream of small tests running. This way you can keep learning and finding incremental wins while you search for that big result.