Multi-armed bandit testing is often pitched as a faster, more profitable version of A/B testing.

There’s some truth in that idea, but it’s misleading to think you could simply upgrade A/B testing by switching to bandits.

In many situations, A/B testing is more likely to produce value over the long run. There are also scenarios where you could leave a bandit test running indefinitely, which you would never do with an A/B test.

These are very different testing methods. This post breaks down what each one does well, and when to use them.

The Key Difference Between Multi-Armed Bandit and A/B Testing

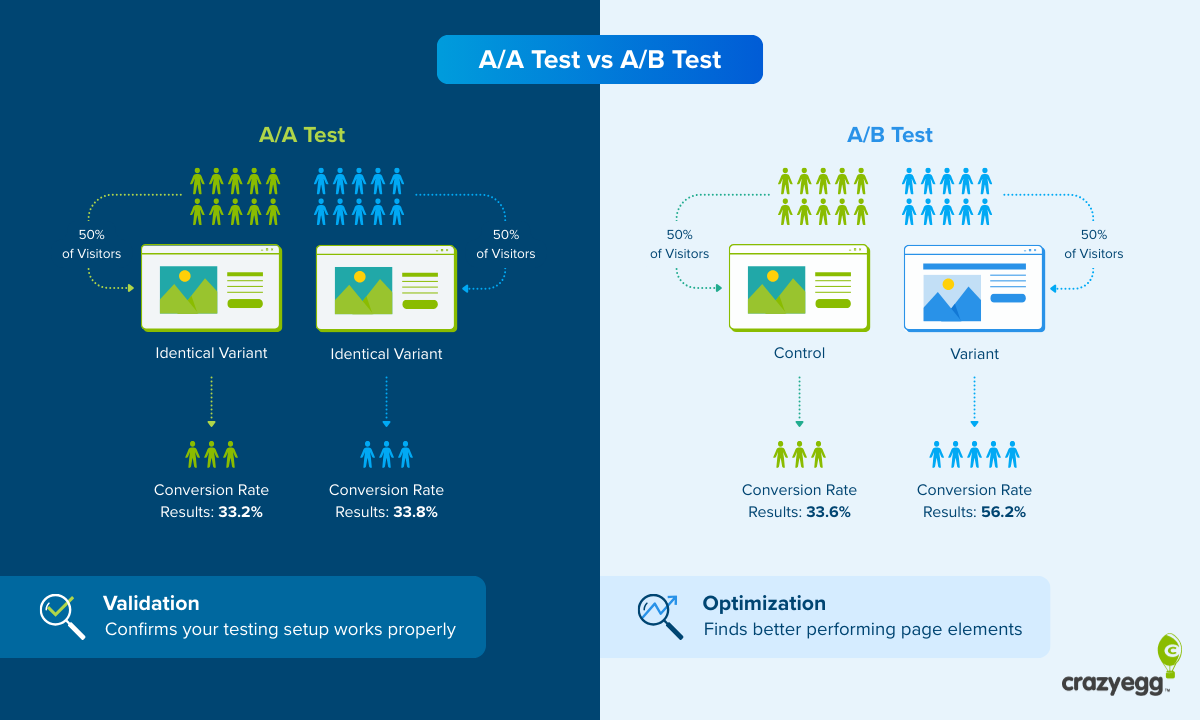

The basic framework for both tests is the same: you split traffic between two versions of a web page to see which one gets more conversions, like sales, signups, or activations.

Whichever version of the page has the better conversion rate is deemed the winner.

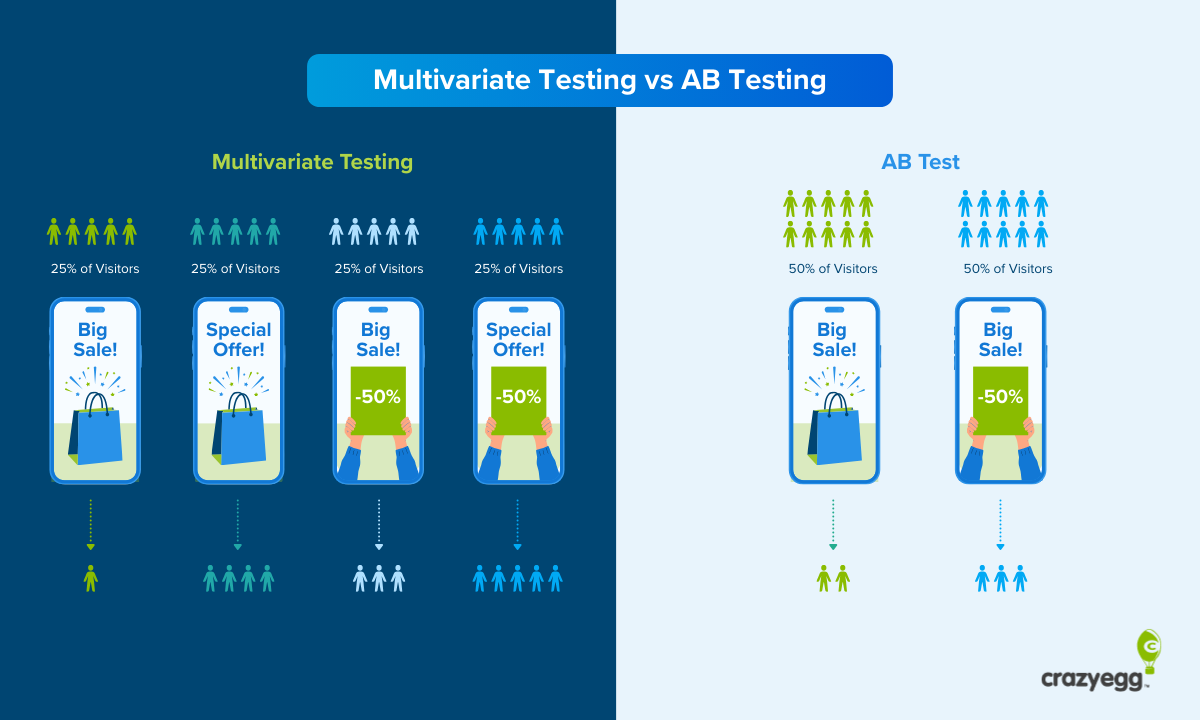

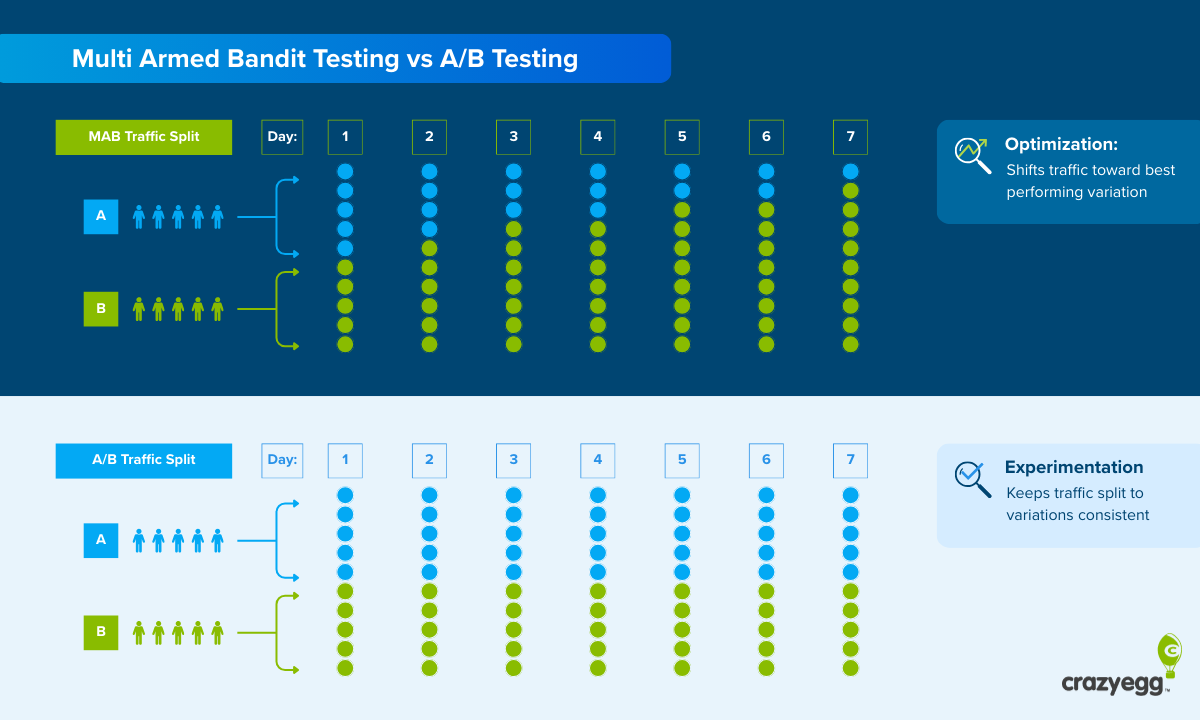

The major difference between these testing methods is in how the traffic is split between the different versions:

- During A/B testing, traffic is split evenly between the different versions for the entire duration of the test. 50% of website visitors see the control (A) and 50% see the variation (B).

- During multi-armed bandit testing, traffic is shifted toward whichever version performs the best as the test goes on. If B is outperforming A, then the bandit will start showing B to more visitors than A.

The difference in how traffic is allocated between versions changes what each method can tell you.

An A/B test is a classic scientific experiment where both versions of your site run under the same conditions: the same amount of traffic, over the same time period. Any external factors that influence your test should affect both versions equally. This means:

- You can isolate cause and effect, knowing with confidence that the change you made caused any difference in results you observe.

- You can analyze the performance differences of secondary metrics, because both versions were seen under the same conditions.

A multi-armed bandit test is more focused on optimization than rigorous scientific experimentation. Instead of holding conditions constant for both versions, it adapts based on what it learns and shows the better performing variation more often. This means:

- The test results can be influenced by early performance, which weakens your confidence in cause and effect.

- Secondary metrics are harder to evaluate because the page versions were not seen under the same conditions.

The difference in experimental design between these testing methods creates fundamental tradeoffs.

When you run multi-armed bandit testing, you prioritize speed and immediate results, but sacrifice some certainty about which version is truly the best.

A/B testing is the opposite. You accept slower progress and short-term losses to get a result that you can be extremely confident in.

Different Types of A/B Tests and Bandits

So far, we’ve talked about testing one version of a website against another, but that’s not always the case.

Let’s walk through a few of the ways each method is used, since they will come up when we discuss when each one works best.

For A/B testing, you’ll see:

- Classic A/B testing: 50% of your traffic goes to the existing web page you are testing (the control) and 50% goes to a variation with a single change. Because you only made one change, cause and effect is very clear.

- Split testing: Same setup as classic A/B testing, but the variation can be a much more drastic set of changes, such as a new layout or design. Because you changed several things, the effect of any specific change is less clear, though you can still compare the performance of the two distinct experiences.

- A/B/n testing: This is where you test more than one variation along with your control, typically splitting traffic evenly between all of the variations.

Any of these A/B testing methods can use unequal traffic allocation. For example, you might send 95% of website visitors to your existing page and only 5% of traffic to the experimental page. This reduces risk because you can get a sense of how the variation performs and spot any bugs before rolling it out to half of your audience.
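If you want to try an unequal split like 95/5, one common way to implement it (an assumption here; the post doesn't prescribe a method, and testing tools normally handle this for you) is to hash a stable visitor ID so each visitor lands in the same bucket on every visit. A minimal Python sketch, where the `assign_variant` helper, the experiment name, and the visitor IDs are all hypothetical:

```python
import hashlib


def assign_variant(visitor_id: str, experiment: str, weights: dict) -> str:
    """Deterministically map a visitor to a variant in proportion to its traffic weight."""
    # Hashing the experiment name with the visitor ID keeps each visitor's
    # assignment stable across visits while bucketing experiments independently.
    digest = hashlib.sha256(f"{experiment}:{visitor_id}".encode("utf-8")).hexdigest()
    # Use the first 32 bits of the hash as a roughly uniform point in [0, 1).
    point = int(digest[:8], 16) / 2**32
    total = sum(weights.values())
    cumulative = 0.0
    for variant, weight in weights.items():
        cumulative += weight / total
        if point < cumulative:
            return variant
    # Floating-point rounding can leave `cumulative` a hair below 1.0.
    return list(weights)[-1]


# Usage: a cautious 95/5 rollout of a hypothetical "new-checkout" experiment.
weights = {"control": 95, "variation": 5}
counts = {"control": 0, "variation": 0}
for i in range(100_000):
    counts[assign_variant(f"visitor-{i}", "new-checkout", weights)] += 1
print(counts)
```

Hashing, rather than drawing a fresh random number on every page view, keeps the experience consistent for returning visitors, which matters when you later compare the two groups.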

There are several different types of multi-armed bandit testing, which are differentiated based on the algorithm that determines which variation is shown.

For example, some MAB tests use Bayesian probability (as in Thompson sampling) to model which variation is most likely to perform best, whereas others use the epsilon-greedy algorithm, which shows the best-performing variation most of the time while reserving a fixed share of traffic for the other variations so the test keeps exploring alternatives.
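To make the two algorithms concrete, here is a minimal Python sketch of epsilon-greedy and of Thompson sampling (one common Bayesian approach), plus a tiny simulator. The function names, the 10% epsilon, and the conversion rates are illustrative assumptions, not settings any particular testing tool uses:

```python
import random


def epsilon_greedy(successes, trials, epsilon=0.1, rng=random):
    """Return an arm index: explore at random with probability epsilon, else exploit."""
    if rng.random() < epsilon:
        return rng.randrange(len(trials))
    # Untried arms get an infinite estimate so each arm is shown at least once.
    rates = [s / t if t else float("inf") for s, t in zip(successes, trials)]
    return max(range(len(rates)), key=rates.__getitem__)


def thompson_sampling(successes, trials, rng=random):
    """Return an arm index by drawing from each arm's Beta posterior and taking the max."""
    # Beta(1 + conversions, 1 + non-conversions) is the posterior under a uniform prior.
    draws = [rng.betavariate(1 + s, 1 + t - s) for s, t in zip(successes, trials)]
    return max(range(len(draws)), key=draws.__getitem__)


def simulate(choose, true_rates, visitors=20_000, seed=0):
    """Run a bandit policy against simulated visitors and return traffic per arm."""
    rng = random.Random(seed)
    successes = [0] * len(true_rates)
    trials = [0] * len(true_rates)
    for _ in range(visitors):
        arm = choose(successes, trials, rng=rng)
        trials[arm] += 1
        if rng.random() < true_rates[arm]:
            successes[arm] += 1
    return trials


# Hypothetical true conversion rates: the bandit never sees them, only outcomes.
print("epsilon-greedy:", simulate(epsilon_greedy, [0.04, 0.05]))
print("Thompson:", simulate(thompson_sampling, [0.04, 0.05]))
```

Epsilon-greedy keeps exploring at a fixed rate for as long as it runs, while Thompson sampling naturally explores less as each arm's posterior becomes more certain.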

There are also contextual bandits that look at additional factors to determine which version to show to different types of visitors. Contextual bandits consider user parameters such as:

- Device type

- Location

- Traffic source

- Time of day

- Day of week

For example, a contextual bandit might discover that one of the variations performs well with mobile users or traffic coming in from paid ads. It can optimize what it shows to specific user segments, which can improve your results during the test.
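The simplest way to picture this is one independent bandit per segment. The sketch below does exactly that and nothing more; production contextual bandits usually model context features jointly (LinUCB is a well-known example) so segments can share what they learn. The class name, segment labels, and conversion rates are hypothetical:

```python
import random
from collections import defaultdict


class SegmentedBandit:
    """Simplified contextual bandit: an independent Thompson sampler per visitor segment."""

    def __init__(self, variants, seed=None):
        self.variants = list(variants)
        self.rng = random.Random(seed)
        # stats[segment][variant] = [conversions, impressions]
        self.stats = defaultdict(lambda: {v: [0, 0] for v in self.variants})

    def choose(self, segment):
        arms = self.stats[segment]

        def draw(variant):
            conversions, impressions = arms[variant]
            return self.rng.betavariate(1 + conversions, 1 + impressions - conversions)

        return max(self.variants, key=draw)

    def update(self, segment, variant, converted):
        record = self.stats[segment][variant]
        record[0] += int(converted)
        record[1] += 1


# Hypothetical segments: mobile visitors prefer A, desktop visitors prefer B.
truth = {"mobile": {"A": 0.12, "B": 0.03}, "desktop": {"A": 0.03, "B": 0.12}}
bandit = SegmentedBandit(["A", "B"], seed=1)
visitor_rng = random.Random(2)
for i in range(10_000):
    segment = "mobile" if i % 2 else "desktop"
    variant = bandit.choose(segment)
    bandit.update(segment, variant, visitor_rng.random() < truth[segment][variant])
print({seg: dict(arms) for seg, arms in bandit.stats.items()})
```

A segment key can be any hashable value, so combinations like `("mobile", "paid")` work too, though every extra split means less data per bandit.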

When To Use Multi-Armed Bandit Testing

Let’s walk through four common scenarios where it makes a lot of sense to use bandits.

- You need to optimize within a short window of time

- You can run variants indefinitely

- You know that learnings could change over time

- You think segment differences matter a lot

For each scenario, I’ll explain why it’s a good idea and provide several real-world examples where bandits are often used.

1. You need to optimize within a short window of time

If your window of opportunity is shorter than the time it would take to gather enough data to make a decision with A/B testing (usually at least 2 weeks), then it makes sense to use bandits. This way you can start favoring the best-performing variation as soon as the data points to it.

Some example scenarios:

- Flash sales where you must optimize sales within hours or days.

- Breaking news headlines where you want to figure out which one resonates with readers before the day is over.

- News stories where you need to figure out how to order stories on your homepage or which to send as push notifications.

- Influencer marketing, where an influencer posts an Instagram story linking to your landing page and you have 24 hours to maximize conversions.

- Last-minute event sales, where the value of unsold seats or tickets drops to $0 once the event starts.

In these cases, the value exists only in a short window of time, so learning quickly is the only smart objective.

You can’t benefit from the rigor of A/B testing in such a short timeframe. By the time you had learned anything with statistical significance, you would have missed your opportunity.
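To see why, here is a rough sketch of the standard normal-approximation sample-size formula for comparing two conversion rates. Assuming (hypothetically) a 5% baseline, a hoped-for 6%, a 5% significance level, 80% power, and 1,000 visitors a day split evenly, it comes out at a little over 8,000 visitors per variant, or about 17 days:

```python
import math
from statistics import NormalDist


def visitors_per_variant(baseline_rate, expected_rate, alpha=0.05, power=0.8):
    """Visitors needed per variant for a two-sided two-proportion z-test (normal approximation)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_power = NormalDist().inv_cdf(power)          # about 0.84 for 80% power
    pooled = (baseline_rate + expected_rate) / 2
    spread = (
        z_alpha * math.sqrt(2 * pooled * (1 - pooled))
        + z_power * math.sqrt(baseline_rate * (1 - baseline_rate)
                              + expected_rate * (1 - expected_rate))
    )
    return math.ceil(spread ** 2 / (expected_rate - baseline_rate) ** 2)


def days_needed(baseline_rate, expected_rate, daily_visitors, variants=2):
    """Days until every variant reaches the required sample with an even traffic split."""
    per_variant = visitors_per_variant(baseline_rate, expected_rate)
    return math.ceil(per_variant * variants / daily_visitors)


# Hypothetical: detect a lift from 5% to 6% with 1,000 visitors a day.
print(visitors_per_variant(0.05, 0.06), "visitors per variant")
print(days_needed(0.05, 0.06, daily_visitors=1_000), "days")
```

Smaller expected lifts or less traffic push that number up quickly, which is why a flash sale or a 24-hour influencer spike rarely gives an A/B test enough data.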

2. You can run variants indefinitely

When there is low-to-no cost to keep multiple variations alive, you can use bandit testing like a permanent, automated optimizer. It just keeps running, constantly adjusting to shifts in user behavior without needing manual adjustments.

Some examples of when this can work:

- Marketing creative, like testing variations of microcopy, hero images, product photos, or promotional banners.

- Opt-in popups that test different incentives like discounts or gated content.

- Cross-sells at checkout to see whether “new arrivals” or “trending now” increases average order value.

- Low-stakes decisions like CTA button colors or shapes.

- Ad unit placement, to see whether ads should go mid-article or in the sidebar.

You need to be a little careful here because this “set it and forget it” style optimization can go off the rails in the wrong situation. Bandits work best for continuous optimization when:

- Outcomes are immediate: If sales take days or weeks to happen, the bandit will struggle to optimize conversions in real time.

- Technical debt is low: If the cost of maintaining variations is high from a development perspective, running the bandit indefinitely is problematic.

- Conditions are relatively stable: If your traffic mix is volatile, it’s harder for the bandit to make good decisions about what’s working.

- The stakes are low enough to tolerate mistakes: If being wrong is expensive or risky, this strategy can backfire.

When these criteria align, running a multi-armed bandit indefinitely makes a lot of sense. When even one of them isn’t true, you have to be a lot more cautious.

3. You know that learnings could change over time

One of the key benefits of A/B testing is understanding cause and effect. But if you are testing something that could change next month, next quarter, or next year, you can’t be sure that your learnings will hold up over time.

Since there isn’t likely to be a permanent winner, the payoff from A/B testing won’t be as valuable. It makes sense to use bandit testing in these cases to capitalize on what’s working at the moment.

For example:

- Seasonal promotions, where what worked in spring won’t hold true in summer.

- Product launches, major ad campaigns, or live events, when your traffic mix is likely to change.

You should be cautious about reading too much into the winners that bandit tests discover in these situations. The result might not help you in the future. But when you expect conditions to change, bandits are useful for exploiting the present moment.
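One way a bandit can keep up with a shifting winner is to discount older data. The sketch below is a discounted variant of Thompson sampling, which is one of several approaches (sliding windows are another). The class name and the 0.99 discount factor are arbitrary assumptions you would need to tune:

```python
import random


class DiscountedThompson:
    """Beta-Bernoulli Thompson sampling that gradually forgets old observations.

    Before each update, every arm's evidence is shrunk toward the Beta(1, 1) prior
    by `discount`, so recent visitors count more than old ones.
    """

    def __init__(self, n_arms, discount=0.99, seed=None):
        self.discount = discount
        self.alpha = [1.0] * n_arms  # 1 + discounted conversions
        self.beta = [1.0] * n_arms   # 1 + discounted non-conversions
        self.rng = random.Random(seed)

    def choose(self):
        draws = [self.rng.betavariate(a, b) for a, b in zip(self.alpha, self.beta)]
        return max(range(len(draws)), key=draws.__getitem__)

    def update(self, arm, converted):
        for i in range(len(self.alpha)):
            self.alpha[i] = 1 + self.discount * (self.alpha[i] - 1)
            self.beta[i] = 1 + self.discount * (self.beta[i] - 1)
        if converted:
            self.alpha[arm] += 1
        else:
            self.beta[arm] += 1


# Hypothetical season change: arm 0 wins for the first 4,000 visitors, then arm 1 does.
bandit = DiscountedThompson(n_arms=2, discount=0.99, seed=3)
visitor_rng = random.Random(7)
late_picks = [0, 0]
for step in range(8_000):
    rates = [0.30, 0.05] if step < 4_000 else [0.05, 0.30]
    arm = bandit.choose()
    bandit.update(arm, visitor_rng.random() < rates[arm])
    if step >= 6_000:
        late_picks[arm] += 1  # traffic after the bandit has had time to adapt
print("picks in the last 2,000 visitors:", late_picks)
```

With a discount of 0.99 the bandit effectively remembers only the last hundred or so observations, so it should react quickly when the winner changes, at the cost of noisier estimates while conditions are stable.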

4. You think segment differences matter a lot

Sometimes the difference between user segments is dramatic even when the overall difference between variants is small. For example, headline A might beat headline B by 2% overall, while mobile users prefer headline A by 15% and desktop users prefer headline B by 13%.
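It helps to see how those segment numbers combine. Treating the figures as percentage-point differences in conversion rate (an assumption; the example doesn't specify), the overall difference is a traffic-weighted average of the segment differences. A 2-point overall lead for headline A only holds if mobile is about 54% of traffic (15/28); at 40% mobile, headline B would win overall by about 1.8 points. The `blended_lift` helper below is hypothetical:

```python
def blended_lift(segment_lifts, traffic_shares):
    """Overall A-minus-B difference as the traffic-weighted average of segment differences."""
    if abs(sum(traffic_shares.values()) - 1.0) > 1e-9:
        raise ValueError("traffic shares must sum to 1")
    return sum(segment_lifts[s] * traffic_shares[s] for s in segment_lifts)


# Positive numbers favor headline A, negative favor headline B (percentage points).
lifts = {"mobile": 15.0, "desktop": -13.0}
print(blended_lift(lifts, {"mobile": 15 / 28, "desktop": 13 / 28}))
print(blended_lift(lifts, {"mobile": 0.4, "desktop": 0.6}))
```

A small shift in traffic mix can flip the overall winner, while the winner inside each segment stays the same.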

When such segment differences really matter, you can use contextual multi-armed bandit testing to figure out which variant works best for each group.

Some tests where contextual bandits can help you exploit segment differences include:

- Finding out which incentives work best for different geographies or traffic sources

- Determining ideal content length for mobile and desktop users

- Seeing which types of social proof work best for new vs returning users

Using bandit testing in these situations requires that the technical debt be low, and that user segments are stable enough for the learnings to hold true consistently. If your evening LinkedIn traffic differs from your lunchtime LinkedIn crowd, for example, it could easily mess up your bandit’s ability to optimize conversions effectively.

When To Use A/B Testing

Here are some of the scenarios where A/B testing is more valuable or less risky to run than bandit testing:

- You need to make a long-term strategic decision

- You know that timing influences behavior

- You have long sales cycles

- You need to track competing metrics to determine success

I’ll explain why it works better in these scenarios and give you a few real-world examples where teams opt to use A/B testing.

1. You need to make a long-term strategic decision

When you want to make major changes to your site that will endure for a long time, it’s less important to squeeze a few extra conversions out of the test than it is to identify the winning variation with a high degree of confidence.

A/B testing is ideal here, even if it is slower and earns less during the test than bandit testing, because the stable traffic allocation enables you to run real hypothesis testing. You can be a lot more confident that your outcome will hold true over time.

It also lets you more accurately interpret the effects on secondary metrics, like churn, refunds, or profit margins, which are critical to consider over the long run.

Some examples include:

- Website redesigns testing new layouts, navigation schemes, or checkout flows.

- Major pricing page changes, like tier structures or anchoring strategies.

- Brand messaging changes, like new positioning or unique selling propositions.

- Content strategy pivots, like trying educational vs conversion-focused content.

The benefit of A/B testing here is that you can be confident the change you made caused the result you see. In these situations, the short-term conversion lift you might get from a bandit test is outweighed by the long-term implications of the change you are considering.
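For reference, the kind of hypothesis test an even split makes possible can be as simple as a two-proportion z-test. A minimal sketch using Python's standard library, with hypothetical counts: 500 conversions out of 10,000 visitors for A against 600 out of 10,000 for B gives a z-score of about 3.1 and a two-sided p-value of about 0.002:

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a, visitors_a, conv_b, visitors_b):
    """Two-sided z-test for a difference in conversion rate between A and B."""
    rate_a = conv_a / visitors_a
    rate_b = conv_b / visitors_b
    # Under the null hypothesis both versions share one conversion rate.
    pooled = (conv_a + conv_b) / (visitors_a + visitors_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical results from an even 50/50 split.
z, p = two_proportion_z_test(conv_a=500, visitors_a=10_000, conv_b=600, visitors_b=10_000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

The test assumes both groups were collected under the same conditions, which is exactly what the fixed traffic split provides.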

One option is to use bandits early in the CRO testing process, while you are still coming up with changes that you eventually want to A/B test. You might be able to use bandits to rule out some ideas quickly, before committing to a full A/B test.
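If you do use a bandit to screen ideas, one way to decide which ones to drop is to estimate each variant's probability of being the best from its Beta posterior. A minimal Monte Carlo sketch, assuming uniform Beta(1, 1) priors and hypothetical counts; the `probability_best` helper and the 1% cut-off mentioned in the comments are illustrative choices, not a standard:

```python
import random


def probability_best(results, draws=20_000, seed=0):
    """Monte Carlo estimate of each variant's chance of having the highest conversion rate.

    `results` maps variant name -> (conversions, visitors); each variant's rate is
    modeled with a Beta(1 + conversions, 1 + non-conversions) posterior.
    """
    rng = random.Random(seed)
    wins = dict.fromkeys(results, 0)
    for _ in range(draws):
        samples = {
            name: rng.betavariate(1 + conv, 1 + visitors - conv)
            for name, (conv, visitors) in results.items()
        }
        wins[max(samples, key=samples.get)] += 1
    return {name: count / draws for name, count in wins.items()}


# Hypothetical early bandit results for three headline ideas.
chances = probability_best({"A": (48, 1_000), "B": (55, 1_000), "C": (21, 1_000)})
print(chances)
# Ideas with a tiny chance of being best (say, under a hypothetical 1% cut-off)
# are candidates to drop before you commit to a full A/B test.
```

Note that a high probability of being best is not the same as a validated winner; the surviving ideas still go through the A/B test.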

2. You know that timing influences behavior

If you know that user behavior changes based on the time of day or day of week, the stable traffic allocation you get with A/B testing is really beneficial. You expose all the variations to the same mix of conditions, which averages out those differences so you can find the real winner.

By contrast, MAB testing will be influenced by those timing conditions, potentially mistaking temporary effects for stable user preferences.

In other words, A/B testing will find the real winner despite timing differences, whereas a bandit might declare a false winner because:

- It happened to explore one variant mostly over a weekend, while your higher-intent traffic arrives on weekdays.

- You ran a promotion during the test that created a surge of urgent traffic while it was exploring one of the variations.

- It disproportionately showed one variant during business hours when intent was higher.

There are ways to account for this using more sophisticated algorithms, but they make it harder for bandits to learn quickly, which is one of their key advantages. By design, A/B testing should neutralize these timing differences, giving you more credible insight into which version actually performs better.

3. You have long sales cycles

A/B testing is usually the better choice when conversions don’t happen right away. So if the thing you care about takes days, weeks, or months to occur, a bandit doesn’t get useful feedback fast enough to adjust its behavior in a productive way.

Some examples:

- A B2B website where visitors typically talk to sales and convert weeks later.

- High-ticket purchases where customers research, compare, and visit the site multiple times before buying.

- When you have a free trial plus a money-back guarantee, so you can’t tell which conversions are profitable for 30-90 days.

What makes A/B testing useful here is that it doesn’t depend on immediate feedback. You can hold the traffic steady, wait for outcomes, and then compare results when you have enough meaningful data.

Bandits will struggle because the immediate feedback may be misleading. A bunch of whitepaper downloads or completed lead-gen forms signal early success, for example, but those early signals may not translate to real profits at the end of a long sales funnel.

4. You need to track competing metrics to determine success

Since A/B testing keeps traffic allocation stable, you can analyze differences in secondary metrics to see if gains in your primary metric are offset by losses elsewhere. Bandits are great for optimizing a single website KPI, but when you need to track more than one, it’s harder for them to give you good data for analyzing tradeoffs.

Some examples:

- Changing a pricing page to increase sales, but not at the expense of average order value or margin.

- Rotating incentives on an opt-in popup to capture more leads, but not at the expense of lead quality.

- Simplifying a checkout flow to boost the completion rate without also increasing the refund or return rate.

It’s much more difficult to assess these types of tradeoffs when you run a bandit that is constantly shifting traffic according to a primary metric. It is possible to track additional metrics and use that data to inform the bandit’s decision-making, but you really need to know what you are doing in order to set up those guardrails.
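As an illustration of what a guardrail might look like, here is a sketch that runs Thompson sampling on sales but stops showing any variant whose refund rate rises above a threshold. The function name, the 5% refund ceiling, the 30-sale minimum before judging refunds, and the stats layout are all hypothetical choices. In practice refunds arrive late, which is the same long-feedback problem described in the previous section:

```python
import random


def choose_with_guardrail(stats, fallback, max_refund_rate=0.05, min_sales=30, rng=random):
    """Thompson sampling on sales, excluding variants that breach a refund-rate guardrail.

    `stats` maps variant -> {"visitors": int, "sales": int, "refunds": int}.
    Variants with fewer than `min_sales` sales are not judged on refunds yet.
    If every variant breaches the guardrail, `fallback` (e.g. the control) is returned.
    """
    eligible = [
        name for name, s in stats.items()
        if s["sales"] < min_sales or s["refunds"] / s["sales"] <= max_refund_rate
    ]
    if not eligible:
        return fallback

    def posterior_draw(name):
        s = stats[name]
        return rng.betavariate(1 + s["sales"], 1 + s["visitors"] - s["sales"])

    return max(eligible, key=posterior_draw)


# Hypothetical: the aggressive discount sells more but 20 of its 90 sales were refunded.
stats = {
    "control": {"visitors": 1_000, "sales": 50, "refunds": 1},
    "aggressive-discount": {"visitors": 1_000, "sales": 90, "refunds": 20},
}
rng = random.Random(0)
picks = [choose_with_guardrail(stats, fallback="control", rng=rng) for _ in range(100)]
print(picks.count("aggressive-discount"), "of 100 picks went to the high-refund variant")
```

Even this simple rule involves judgment calls (the ceiling, the minimum sample, what to do when everything fails), which is why guardrails need careful setup.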

Using Multi-Armed Bandit and A/B Testing Together

As you can see, these are two distinct tools that teams can use to figure out the best way forward on their site.

Here are some different ways that teams use both bandits and A/B tests to increase conversions and improve user experience on their site:

- Validate then optimize: Use A/B testing to find the big-picture changes, and then use bandits to uncover the small variations that perform best. For example, A/B test a landing page to find out that long-form content converts better than short-form, then use bandits to test smaller copy and typography elements.

- Validate the bandit: Run a multi-armed bandit to find your winner quickly, then validate that winner with an A/B test against your existing page.

- Parallel testing: Use A/B testing for strategic tests and bandits for tactical decisions. For example, A/B test page structures and messaging while bandit testing different hero images or promotional banners.

There are many different ways to incorporate both testing methods into your overall strategy. I’d recommend exploring the sites and blogs of CRO experts, many of whom use testing as a core part of their strategy.

Analyzing Bandit and A/B Test Results

The conversion rate tells you which variation performed the best, but it doesn’t really explain why. Analyzing user behavior and taking a deeper look at customer engagement metrics can help you figure out what drove users to convert on one version more than another.

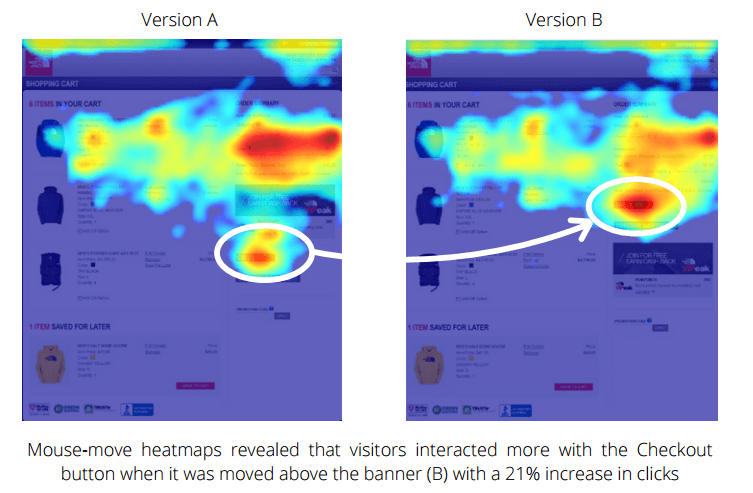

You can use tools like heatmaps, which show where users clicked or how far down the page they scrolled. What drew their attention? What did they ignore?

Session recordings show you exactly how users navigated the page. Where did they linger? What seemed to confuse them?

You can also use surveys to get direct feedback from users. What were they trying to accomplish? How easy did they feel the page was to use?

Analyzing an A/B test is relatively straightforward. All versions saw the same amount of traffic under the same conditions. Good A/B testing tools make it possible to dig into the engagement and behavioral data for each version.

With bandit testing, analysis is a little more complex because the dynamic traffic split makes comparisons less apples-to-apples. Still, you can use heatmaps, session recordings, and survey data to get a better sense of what drove users to take the actions you observe in the test results.
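If your tool lets you log the probability with which each visitor was shown each variant (an assumption; not every tool exposes this), inverse propensity weighting is one way to make bandit comparisons fairer. The sketch below uses a hypothetical log where both variants truly convert at the same rate, but the bandit favored B during a high-intent period. The naive rates make B look more than twice as good, while the weighted estimates come out equal, at 6% each. All function names are hypothetical:

```python
def naive_rate(log, variant):
    """Conversions divided by impressions for one variant, ignoring how traffic shifted."""
    shown = [converted for v, _, converted in log if v == variant]
    return sum(shown) / len(shown)


def ipw_rate(log, variant):
    """Inverse-propensity-weighted conversion rate for one variant.

    Each log entry is (variant_shown, probability_it_was_shown, converted).
    Weighting each conversion by 1 / probability estimates the rate the variant
    would have had if every logged visitor had seen it.
    """
    weighted = sum(converted / p for v, p, converted in log if v == variant)
    return weighted / len(log)


def period(n_a, conv_a, n_b, conv_b, p_a):
    """Build hypothetical log entries for one period of a bandit test."""
    log = [("A", p_a, i < conv_a) for i in range(n_a)]
    log += [("B", 1 - p_a, i < conv_b) for i in range(n_b)]
    return log


# Low-intent period: 50/50 split, both variants convert at 2%.
# High-intent period: the bandit favors B 90/10, both variants convert at 10%.
log = period(50, 1, 50, 1, p_a=0.5) + period(10, 1, 90, 9, p_a=0.1)
for variant in ("A", "B"):
    print(variant, round(naive_rate(log, variant), 3), round(ipw_rate(log, variant), 3))
```

Weighting only corrects for how traffic was allocated; it doesn't replace the behavioral context that heatmaps, recordings, and surveys provide.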