AI landing page analyzers review your page’s copy and design and tell you how to improve conversions.

That’s the idea, at least.

When I learned about them, I was instantly curious. I’ve seen firsthand how a good human landing page audit can multiply conversions. This struck me as a potentially inexpensive and very lucrative AI use case.

To see if they actually deliver, I ran a Crazy Egg feature page through every tool I could find. I gave each one a score based on five criteria and split-tested the winner.

The results were … let’s go with “interesting.”

How AI Landing Page Auditors Work

On the surface, the workflow is simple: input the URL of your landing page, wait a minute or two, and receive a set of CRO suggestions.

Look under the hood, and it becomes clear why they’re so promising. There are three key technologies at play: computer vision, custom RAG or LLM training, and heuristic-AI combinations. It’s likely that all the tools I tested use a combination of these to some degree.

Here’s how these technologies work:

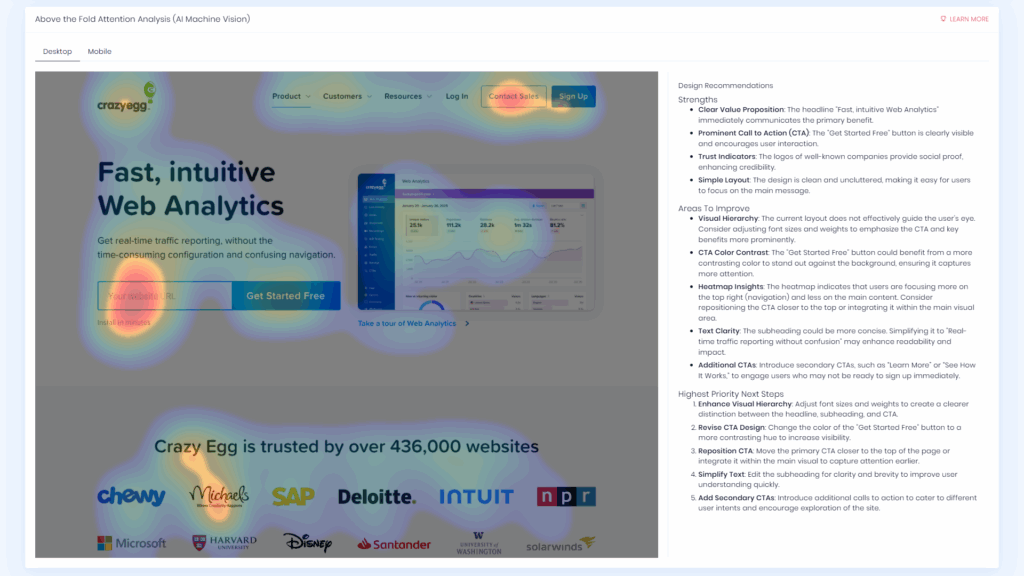

- Computer vision: This is the ability of AI to interpret images. It matters because it lets a tool understand a page’s visual structure. A computer vision model converts images to structured data, which an LLM then interprets. Ad Alchemy, for example, uses computer vision to simulate a heatmap as part of its above-the-fold analysis.

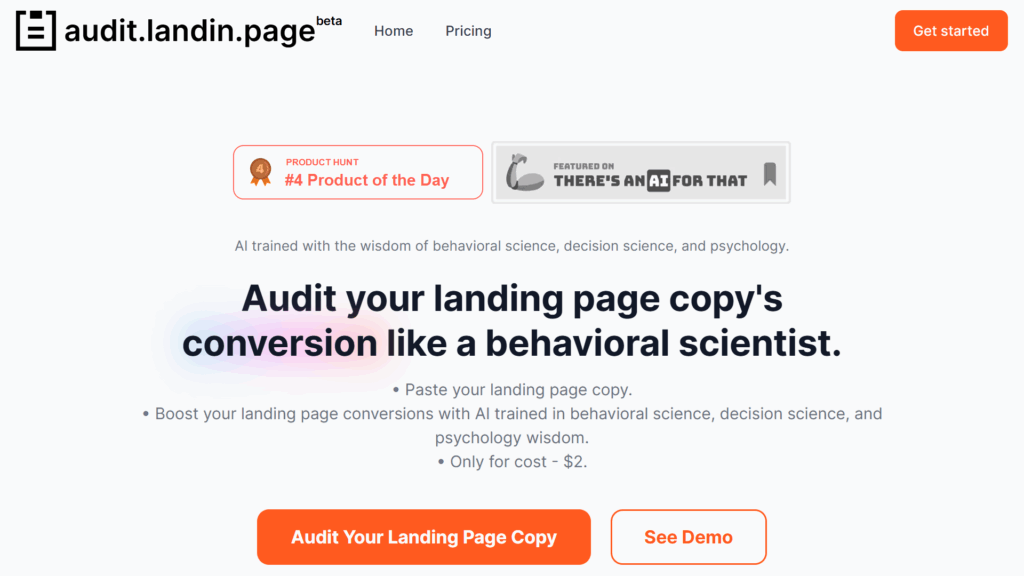

- Unique training data: This involves training a custom LLM on UX, CRO, and behavioral psychology data and research, along with in-house test results. In practice, this most likely takes the form of retrieval-augmented generation (RAG). In this system, the LLM fetches (or retrieves) relevant information from a knowledge base of data and research. For example, access to a large body of behavioral psychology research is the USP of audit.landin.page.

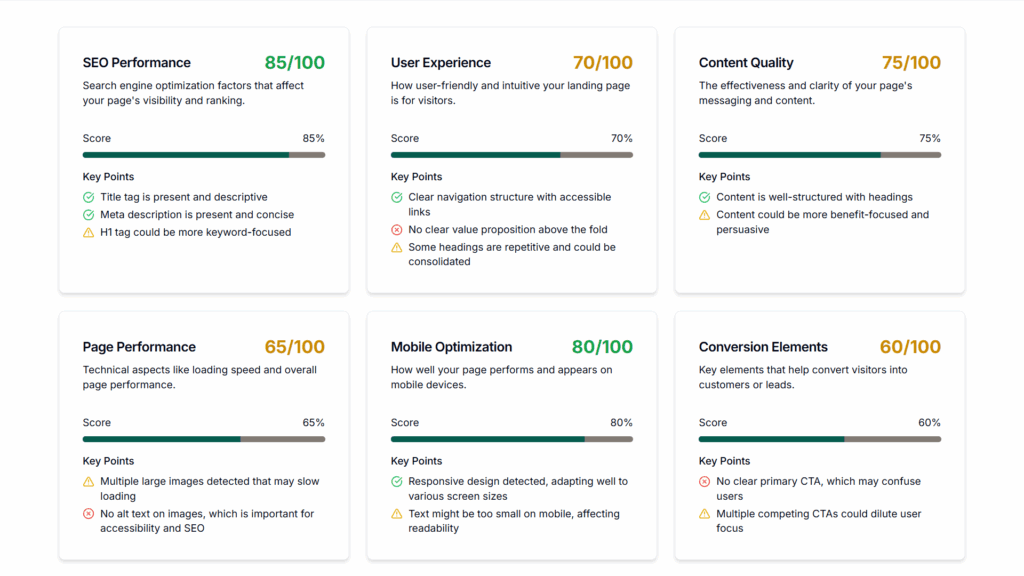

- Heuristic scoring: Rule-based logic works well alongside AI. An algorithm applies rule-of-thumb CRO benchmarks against an interpreted image and outputs a series of scores. AI then combines this heuristic analysis with more complex data analysis, offering suggestions where required. The real innovation is the ability of the LLM to analyze potentially hundreds of heuristics and pick out the most relevant ones based on a landing page’s audience and funnel stage. Expertise AI’s landing page auditor seems to combine heuristic scoring with AI analysis.
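To make the heuristic-scoring idea more concrete, here is a minimal Python sketch. It assumes a computer vision step has already turned the page into a list of elements. The `Element` schema, the 800px fold, the three rules, and the `RELEVANT_RULES` funnel-stage map are all hypothetical, and none of it reflects how any specific tool I tested actually works. In a real analyzer, the relevance filter at the end is the part an LLM would handle.

```python
from dataclasses import dataclass

@dataclass
class Element:
    """One page element, as a computer vision pass might describe it (hypothetical schema)."""
    kind: str    # e.g. "headline", "cta", "logo"
    text: str
    top_px: int  # distance from the top of the page, in pixels

FOLD_PX = 800  # assumed viewport height; real tools would vary this by device

def heuristic_scores(elements: list[Element]) -> dict[str, float]:
    """Apply rule-of-thumb checks; 1.0 means the rule passes, 0.0 means it fails."""
    ctas = [e for e in elements if e.kind == "cta"]
    headlines = [e for e in elements if e.kind == "headline"]
    return {
        # At least one call to action is visible without scrolling.
        "cta_above_fold": float(any(e.top_px < FOLD_PX for e in ctas)),
        # The first headline in the list is 12 words or fewer.
        "short_headline": float(bool(headlines) and len(headlines[0].text.split()) <= 12),
        # The page shows social proof such as client logos.
        "social_proof": float(any(e.kind == "logo" for e in elements)),
    }

# Hypothetical map of which rules matter at each funnel stage. This relevance
# judgment is the step an LLM would make in the tools described above.
RELEVANT_RULES = {
    "awareness": {"short_headline", "social_proof"},
    "decision": {"cta_above_fold", "social_proof"},
}

def failed_relevant_rules(scores: dict[str, float], stage: str) -> list[str]:
    """Return the failing rules that matter for the page's funnel stage."""
    return sorted(rule for rule, score in scores.items()
                  if score < 1.0 and rule in RELEVANT_RULES[stage])

# Hypothetical page: a short headline and a CTA placed below the fold, no logos.
page = [
    Element("headline", "See how visitors use your site", 120),
    Element("cta", "Get Started Free", 1000),
]
print(failed_relevant_rules(heuristic_scores(page), "decision"))
# prints ['cta_above_fold', 'social_proof']
```

At the decision stage, the hypothetical page fails both relevant rules; at the awareness stage, only the missing social proof would be flagged.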

My Rating System: How I Scored the Tools

I gave each tool a score of zero to three on each of five questions, for a maximum total of 15. Zero indicated exceptionally poor performance, while three signified quality that I would be happy with if it came from a human copywriter or CRO specialist.

Here are the questions I used in my evaluation:

- How comprehensive is the analysis? This looks at how much of the page the analysis covers—headlines, descriptions, images, CTAs, etc.

- How specific are the suggestions? For this, I’m looking for specific implementation guidelines that require a minimum of interpretation.

- How original are the optimizations? Stale visual design and copywriting advice like “Change your headline to ‘Unlock Revenue Gains’” will result in a lower score. Because AI models rely on consensus, I expected this to be an issue.

- How realistic is the implementation? Along with being specific, I actually need to be able to implement the advice. “Run a comprehensive A/B split test” (I received this suggestion) is practically useless.

- How many mistakes were made? If an analyzer misread the landing page, it received a lower score.
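The scoring arithmetic is simple, but a short sketch makes the 15-point ceiling explicit. This is a hypothetical Python helper, not something I used during testing; the criterion names are my own shorthand for the five questions, and `accuracy` stands for the mistakes question, where a higher score means fewer misreadings.

```python
# Shorthand labels for the five questions (illustrative names only).
CRITERIA = ("comprehensiveness", "specificity", "originality", "realism", "accuracy")
MAX_PER_CRITERION = 3
MAX_TOTAL = MAX_PER_CRITERION * len(CRITERIA)  # 3 points x 5 questions = 15

def total_score(scores: dict[str, int]) -> int:
    """Validate five 0-3 scores and return the total out of MAX_TOTAL."""
    if set(scores) != set(CRITERIA):
        raise ValueError(f"expected exactly these criteria: {CRITERIA}")
    for name, value in scores.items():
        if not 0 <= value <= MAX_PER_CRITERION:
            raise ValueError(f"{name} must be between 0 and {MAX_PER_CRITERION}")
    return sum(scores.values())

# Hypothetical breakdown, not any real tool's scores.
example = {"comprehensiveness": 1, "specificity": 2, "originality": 0,
           "realism": 1, "accuracy": 1}
print(f"{total_score(example)}/{MAX_TOTAL}")  # prints 5/15
```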

In addition to the above, I imposed the following rules:

- I excluded VWO (one of the better-known analyzers) because it is a questionnaire-style analysis without any AI.

- I focused mainly on dedicated landing page analyzers, not full-suite ones, with a few exceptions for very popular tools.

- I avoided Custom GPTs. All entries are independent tools in their own right.

Results: Landing Page Analyzers

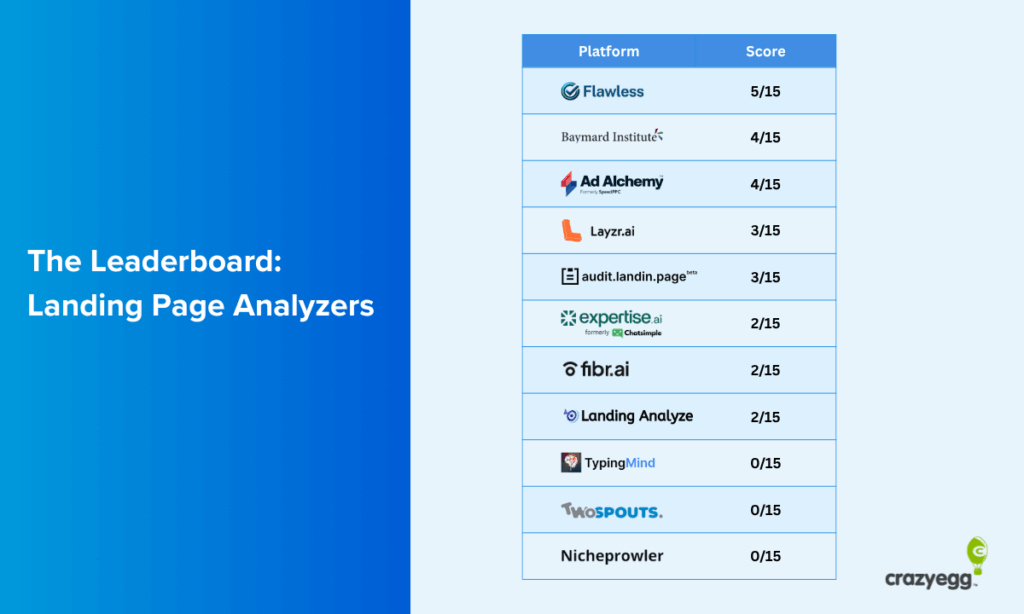

I won’t beat around the bush. The landing page analyzer results were universally poor.

Most offered only vague advice, and the suggestions themselves would usually make any self-respecting CRO expert wince a little. The word “unlock” appeared more times than it had any right to.

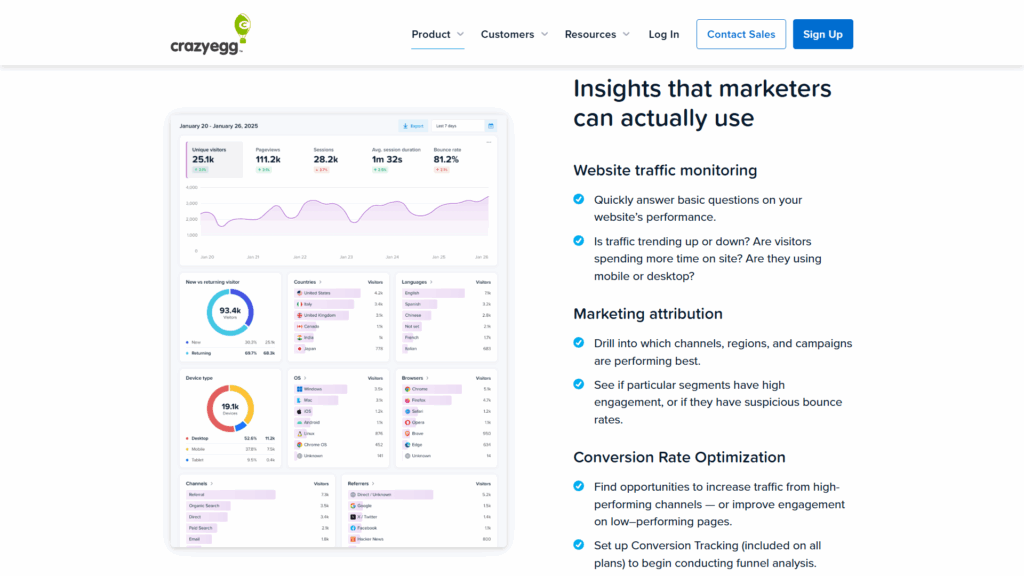

I used the Crazy Egg Web Analytics landing page to run the test. You’ll find a full-page screenshot in the split-test section later in the article.

Here’s a ranking of the tools (in descending order) with a summary of the audit they provided:

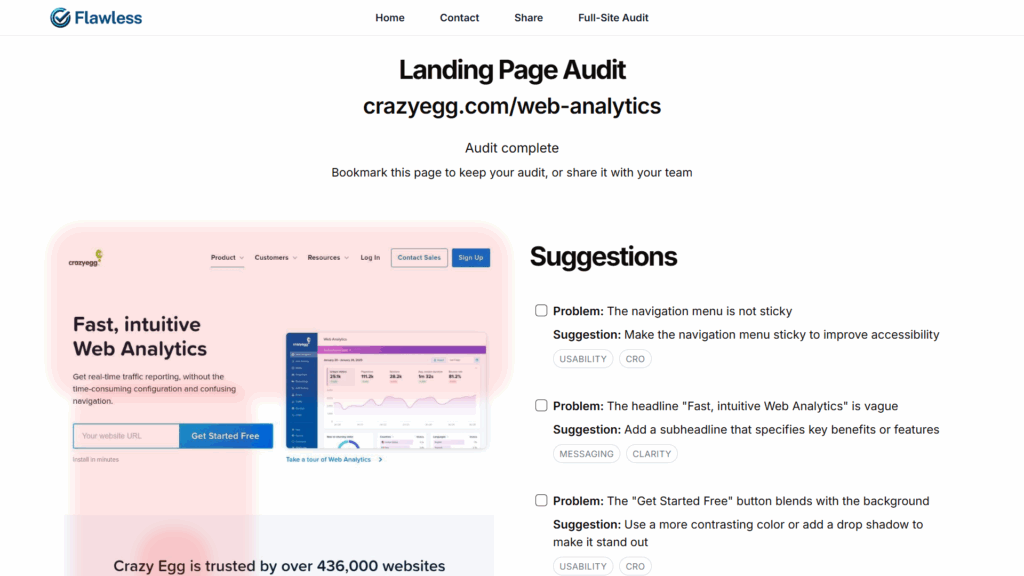

Flawless.is = 5/15

I paid $2.99 for a full audit with Flawless. While Flawless didn’t perform well on comprehensiveness, it read the page reasonably well and offered specific suggestions. Out of all the tools I tried, this one was the most actionable.

Baymard Institute = 4/15

I had high hopes for Baymard’s UX-Ray 2.0 tool, but the advice, while seemingly based on solid UX principles, was largely unspecific, such as, “Use bespoke homepage imagery that engages users and that shows products in appealing lifestyle contexts.” For a purely UX audit, I can see the value here. For CRO, it is somewhat limited. The entry-level subscription is $199/month (I tested the free version).

Ad Alchemy = 4/15

Ad Alchemy gave some specific suggestions, like the advice to change the headline to “Unlock Your Website’s Potential with Fast, Intuitive Analytics.” Despite this, the advice was vague on the whole (for example, “The USP could be more prominent”) and not particularly comprehensive. There were only five page-wide optimization steps.

Layzr.ai = 3/15

The advice from Layzr.ai was adequate but, as with audit.landin.page, I suspect that this is an LLM wrapper. I was able to generate very similar responses with a basic ChatGPT prompt.

audit.landin.page = 3/15

I had high hopes for this tool. The analysis was extremely long. My suspicion is that the analyzer is a ChatGPT wrapper that’s sending a set of prompts via the API. There were some interesting insights, such as a description of the role of the “ambiguity effect” and “zero-risk bias,” but it was largely unimplementable.

Expertise AI = 2/15

Expertise AI provided simplistic, general results with a total of five suggestions, one of which was to “Include a prominent, single primary CTA above the fold to improve conversion rates.” This is a misreading of the page, which already has a primary CTA.

Fibr.ai = 2/15

It’s not really accurate at this stage to refer to “market leaders,” as the category for this type of tool is so nascent. However, Fibr.ai comes closest. It’s a growing startup and ranks first in Google for the term “landing page analyzer.” It was nonetheless a disappointment. It gave basic, repetitive advice, and, while there was a degree of specificity, the optimizations were poor. It suggested changing the main headline to “Unlock Your Website’s Potential with Real-Time Analytics.”

Landing Analyze = 2/15

Advice from Landing Analyze was vague and generic. One suggestion was to “Add engaging visual elements that amplify emotional connection.” It provided both a “summary” and “detailed analysis,” with both lacking any real degree of depth.

TypingMind = 0/15

TypingMind provided only four very basic results for the hero section, one of which was “optimize copy” with “alternate headlines and subheadings.”

Two Sprouts = 0/15

Suggestions from Two Sprouts were somewhat specific but mostly wrong. It stated, for example, that “The following headline ‘Get more of what you want’ is too vague” (that’s not the headline) and “Consider mentioning that there is a free trial…” (the main CTA is “Get Started Free”).

NicheProwler = 0/15

NicheProwler offered a small number of very generic suggestions and even misread the page in certain places, such as misunderstanding the location of the primary CTA.

Split-Test With Flawless.is: Process and Results

Using manual rule-of-thumb criteria to evaluate landing page audit tools is one thing. But any CRO expert will tell you that the proof is in the pudding (the A/B-test pudding, to be precise).

With that in mind, I took all of the Flawless.is suggestions and created a variant. For the experiment, I tested the Crazy Egg Web Analytics page. Its current visitor-to-signup conversion rate is 38%.

Step 1: Modify the Landing Page

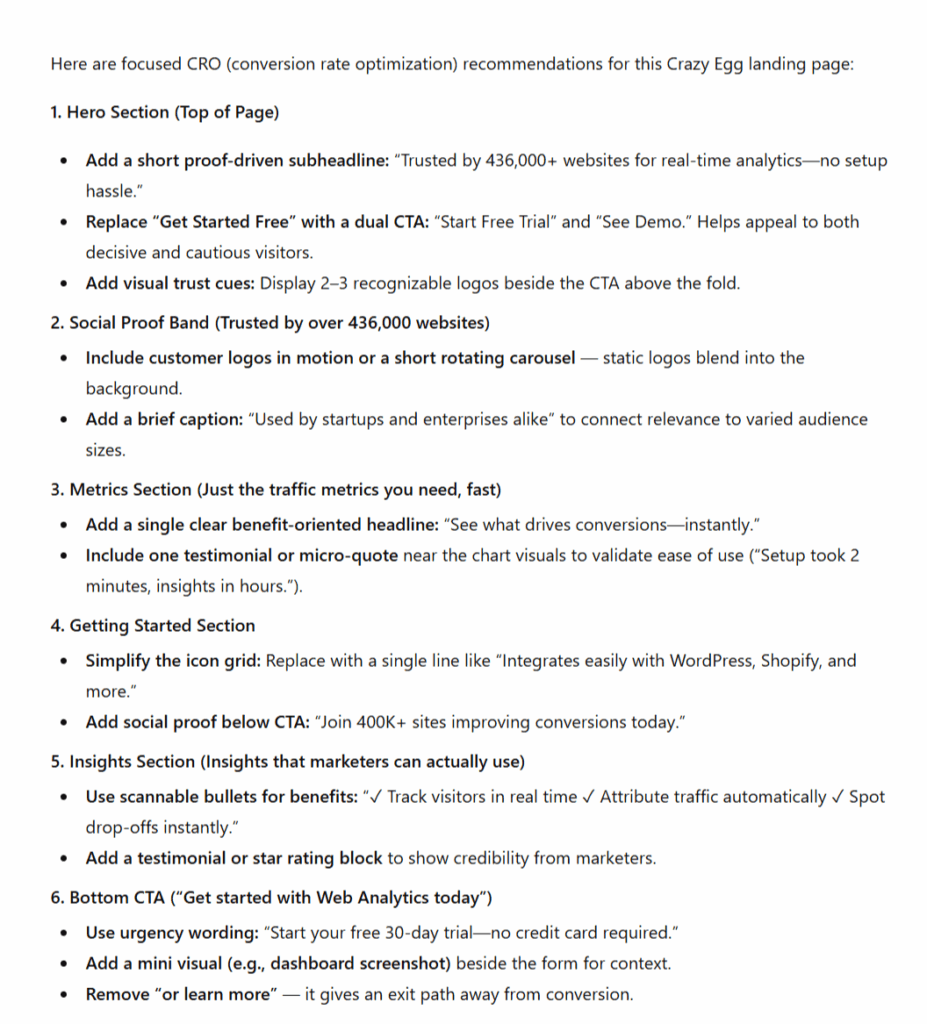

Flawless.is provided twelve optimizations in total, two of which I discarded because they came from a misreading of the landing page.

Here are all the suggestions:

- Implement a sticky (top) navigation bar to improve accessibility. (I ignored this one; the navigation is already sticky.)

- Use more whitespace and section dividers to improve flow.

- Describe benefits or key features in the subheadline with more specific language about what users gain.

- Use a more contrasting color to make the “Get Started Free” button stand out.

- Add a heading like “Trusted by Leading Brands” above the logos in the trust section. (I ignored this one; the section already has a heading.)

- Add a section with bullet points highlighting key benefits.

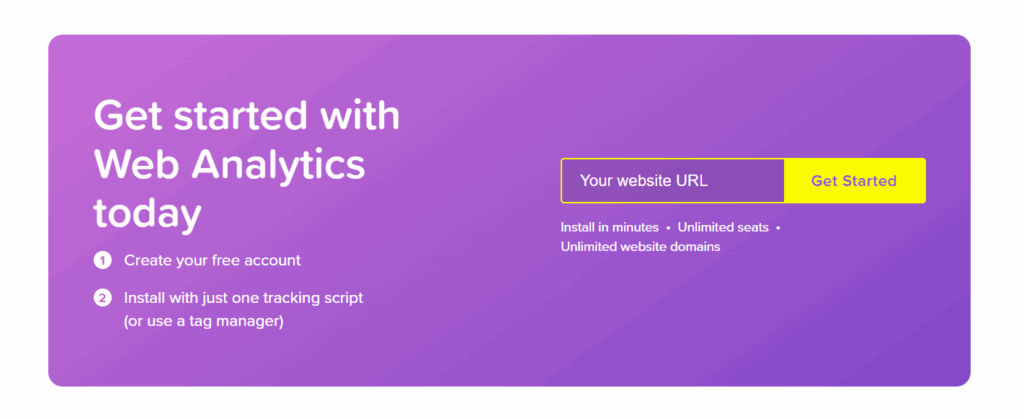

- Add a button or link to the “Get started in minutes” section to guide users to the next step.

- Use bullet points or icons to break up the text and improve readability in the “Insights that marketers can actually use” section.

- Add buttons or links to each tool for easy access in the “More tools to get started” section.

- Increase the button contrast to make it more noticeable in the “Get started with Web Analytics today” section.

- Add a “Contact Us” or “Subscribe” button in the footer.

Step 2: The A/B Test

Here’s a full screenshot of the original landing page. Underneath, you’ll find a visual breakdown of the changes I made in line with the suggestions from Flawless.

For the hero section, I rewrote the subheadline to “specify benefits or features” and increased the contrast of the CTA button.

A bulleted section describing benefits was placed beneath the client logos:

More whitespace and breaks were added between feature sections:

Some of the feature sections were modified so text was shown in a bulleted format:

The final CTA was also changed to a higher contrast color.

Step 3: The Results

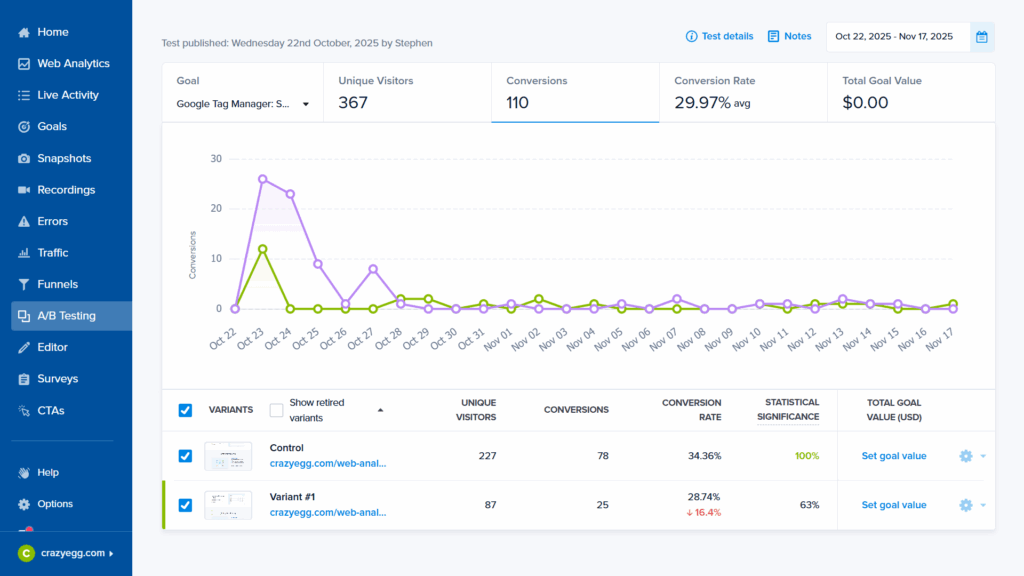

We ran the test for three weeks, after which the variant with the “optimizations” showed a 16.4% relative decrease in conversion rate compared with the original landing page.

We would need a longer test to pin down the variant’s exact conversion rate, but we can say that it’s unlikely to beat the control and more likely to underperform it.
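How much longer a test needs to run depends on traffic, because the same gap between conversion rates can be noise in a small sample and significant in a large one. As an illustration, here is a standard two-proportion z-test in Python. The visitor counts are hypothetical (the experiment’s traffic isn’t reported here), and this is one common frequentist check, not necessarily the method any particular A/B testing tool uses.

```python
from math import erf, sqrt

def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Two-sided z-test for the difference between two conversion rates.

    Returns (z, p_value); z is negative when B converts worse than A.
    Uses the pooled rate under the null hypothesis that A and B convert equally.
    """
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Standard normal CDF via the error function: Phi(x) = (1 + erf(x / sqrt(2))) / 2
    phi = 0.5 * (1 + erf(abs(z) / sqrt(2)))
    return z, 2 * (1 - phi)

# Hypothetical visitor counts, with rates close to the ones in this test (about 34% vs 29%).
for visitors in (200, 1000):
    control = round(visitors * 0.3436)
    variant = round(visitors * 0.2874)
    z, p = two_proportion_z_test(control, visitors, variant, visitors)
    print(f"{visitors} visitors per arm: z = {z:.2f}, p = {p:.3f}")
```

With these hypothetical counts, 200 visitors per arm does not reach the usual 0.05 threshold, while 1,000 visitors per arm does.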

Here are the final results:

- Conversion rate of control: 34.36%

- Conversion rate of variant: 28.74%

- Action measured: Email signups
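Note that the 16.4% figure is a relative decrease, not a drop of 16.4 percentage points. A quick Python check using the two rates above (the helper name is my own):

```python
def relative_change(control_rate: float, variant_rate: float) -> float:
    """Change of the variant relative to the control, as a fraction of the control."""
    return (variant_rate - control_rate) / control_rate

control, variant = 34.36, 28.74  # conversion rates in percent, from the results above
print(f"Absolute change: {variant - control:.2f} percentage points")
# prints "Absolute change: -5.62 percentage points"
print(f"Relative change: {relative_change(control, variant):.1%}")
# prints "Relative change: -16.4%"
```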

I used the Crazy Egg A/B testing tool to run the experiment. The integrated page editor, which makes it easy to implement no-code visual and copy edits, was a big help in getting the variant up and running quickly.

Verdict: Mostly a Waste of Time for Now

The results speak for themselves. All AI landing page analyzers scored poorly on my human evaluation, and the best of the bunch had a negative effect in a real test.

So why did they all underperform given the promise of the underlying tech? I would argue that there are four key reasons for the lackluster results.

Context blindness

LLMs can’t truly interpret a landing page’s intent, audience, or funnel stage, so feedback often mismatches the page’s strategic purpose.

Only one of the tools I reviewed asked for information about my audience, and even then it was optional. This should be a relatively easy problem to solve, because optimizers can simply provide audience and funnel data, which can then be mapped to the data the LLM draws on.
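Supplying that context can be as simple as stating it in the prompt. The Python sketch below only assembles text and makes no API call. `PageContext`, `build_audit_prompt`, the example audience, and the placeholder URL are hypothetical, and the instruction wording is one reasonable choice rather than a tested recipe.

```python
from dataclasses import dataclass

@dataclass
class PageContext:
    """Hypothetical audience and funnel details an optimizer might supply."""
    audience: str
    funnel_stage: str  # e.g. "awareness", "consideration", "decision"
    primary_goal: str

def build_audit_prompt(page_url: str, ctx: PageContext) -> str:
    """Assemble an audit prompt that states the page's audience and funnel stage up front."""
    return "\n".join([
        "You are a conversion rate optimization specialist.",
        f"Audit the landing page at {page_url}.",
        f"Audience: {ctx.audience}",
        f"Funnel stage: {ctx.funnel_stage}",
        f"Primary conversion goal: {ctx.primary_goal}",
        "Only suggest changes that suit this audience and funnel stage,",
        "name the page element each suggestion applies to,",
        "and do not suggest adding elements the page already has.",
    ])

prompt = build_audit_prompt(
    "https://example.com/landing",  # placeholder URL
    PageContext("marketing managers at small ecommerce brands", "decision", "free trial signups"),
)
print(prompt)
```

The last instruction targets the kind of misreading several tools made, such as recommending a primary CTA or a sticky navigation bar that the page already had.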

Constraints of computer vision

Landing page elements are often subtle. A major limitation of computer vision is that it requires huge amounts of visual training data (this is called data dependency).

If models aren’t trained on a comprehensive set of landing page elements, they can’t identify those elements accurately, which makes it difficult to establish true cause-and-effect relationships.

Limited data sets

One of the issues that UX and CRO experts have traditionally faced when making optimization decisions is selecting from a vast, context-dependent set of heuristics and past empirical studies. It’s an advanced skill.

AI tools should in principle be able to help with this. They can draw from a large database to find only those guidelines and tests that are relevant. This could be done either through training custom LLMs or retrieval-augmented generation (RAG), which essentially inserts an external knowledge layer between the prompt and the LLM.
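Here is a toy sketch of the retrieval step in RAG, in Python. It is heavily simplified: `KNOWLEDGE_BASE` holds placeholder topic labels rather than real research, and bag-of-words cosine similarity stands in for the embedding search a production system would more likely use. The function names are hypothetical.

```python
import math
import re
from collections import Counter

# Hypothetical knowledge base of placeholder topic labels (not real findings).
KNOWLEDGE_BASE = [
    "Note on free trial messaging near the call to action",
    "Note on social proof and client logos",
    "Note on headline length and clarity",
    "Note on checkout form fields",
]

def bag_of_words(text: str) -> Counter:
    """Lowercase word counts; a crude stand-in for an embedding."""
    return Counter(re.findall(r"[a-z]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, k: int = 2) -> list[str]:
    """Return the k knowledge-base entries most similar to the query."""
    q = bag_of_words(query)
    return sorted(KNOWLEDGE_BASE, key=lambda doc: cosine(q, bag_of_words(doc)), reverse=True)[:k]

def augmented_prompt(question: str, k: int = 2) -> str:
    """Insert the retrieved entries between the user's question and the LLM."""
    notes = "\n".join(f"- {doc}" for doc in retrieve(question, k))
    return f"Base your answer on these notes:\n{notes}\n\nQuestion: {question}"

print(augmented_prompt("Should the headline be shorter and clearer?", k=1))
```

The point of the design is that the LLM only sees the few entries relevant to the question, which is how a large research base could be narrowed to the guidelines that fit a given page.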

It seems that this data hasn’t yet been consolidated in a meaningful way in any single analyzer, including the one that advertised its access to consumer behavior research. Baymard’s tool performed best in this regard, but it was largely UX-focused and somewhat generic.

Poor prompt engineering

I suspect that all of the tools were relatively simple API wrappers. The responses suggested that little effort had gone into prompt design.

Interestingly, where it looked like prompts had been given sufficient attention, answers were much more detailed despite still suffering from the issues outlined—poor understanding of visual elements and context and a lack of supporting data.

Reasons for Optimism: Why You Should Watch These Tools

I didn’t go into this experiment expecting to be amazed. Nonetheless, I was disappointed by the results.

End of the story?

On the contrary. I think there are reasons to be optimistic. The tech is moving fast. If the right balance is found, it will open up a world of possibilities for CRO specialists and non-experts alike.

There are several points to keep in mind:

- The underlying technology has a lot of potential: The ability of computer vision to recognize increasingly complex layouts and map this over to relevant CRO cause-effect data is only getting better.

- AI landing page analyzers are in a very early stage of development: Big-brand CRO solutions don’t seem to have picked up on these landing page analyzers in a significant way yet. However, Baymard’s involvement does indicate that this is changing. And there are lots of interesting tools in beta.

- They could be a godsend for smaller businesses: For me, the real value in these tools is for SMEs and high-ticket service businesses that don’t have enough visitors to run A/B split tests. In my opinion, the ease and effectiveness with which these tools could be used will sustain demand.

- Synthetic agents will rock the space: Earlier this year, researchers at Cornell University matched the results of a human split test on Amazon using AI agents. This is a groundbreaking development. If AI agents can simulate human split tests and this functionality is integrated with easily accessible tech like this, businesses will be able to identify genuine conversion enhancers quickly and inexpensively.

So, what does all this mean for your business?

Apart from not firing your human CRO and copywriting team just yet, it’s worth monitoring this tech. Lots of companies are innovating in this space. CRO Benchmark deserves an honorable mention (it’s an ecommerce auditor, so it wasn’t included), and there are startups with solutions still in beta (PPC.io is one example). It would also be remiss of me not to mention Crazy Egg’s AI landing page editor (also still in beta).

For now, running your landing page through ChatGPT with a well-written prompt will be enough to match what these tools can do and may offer some useful suggestions that your human team can test.

I was able to generate similar outputs, some of which are worth testing, with a simple prompt in ChatGPT 5:

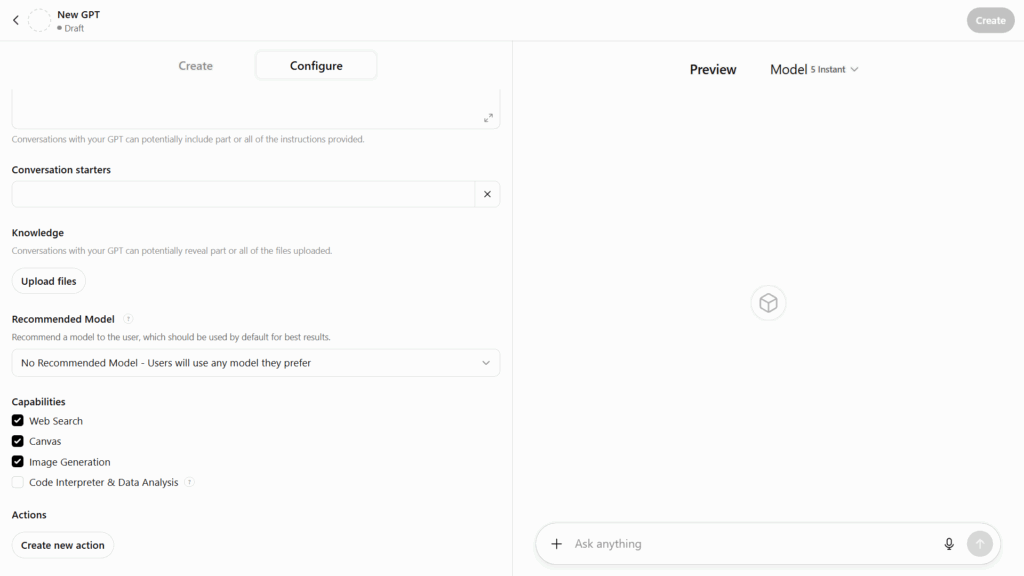

It’s also worth experimenting with custom GPTs to provide additional context to prompts. In particular, the “Knowledge” feature, which allows you to upload your own data, approximates more complex retrieval-augmented generation (RAG) systems on a smaller scale.

AI Tools Are Changing the CRO Tech Stack

Despite the lackluster results (to put it mildly), there is potential here.

Companies are working on improved versions of tech like this, and it’s likely that leading CRO and ad platforms will release their own versions.

AI is changing the CRO tech stack—AI-managed split tests are already common and AI personalization is whirring away behind the scenes of many marketing campaigns.

The best thing that CRO specialists, web admins, and business owners can do for now is to keep their ear to the ground and keep experimenting. My prediction is that these tools will change the way we design landing pages. It’s simply a matter of when.